Agentic Era Part 1. A Strategic Inflection Point Where Orchestration and Distribution - Not Model Power - Define AI Moats

The frontier AI race has shifted from raw model power to orchestration, distribution, and efficiency, creating a discontinuity moment for investors seeking asymmetric returns.

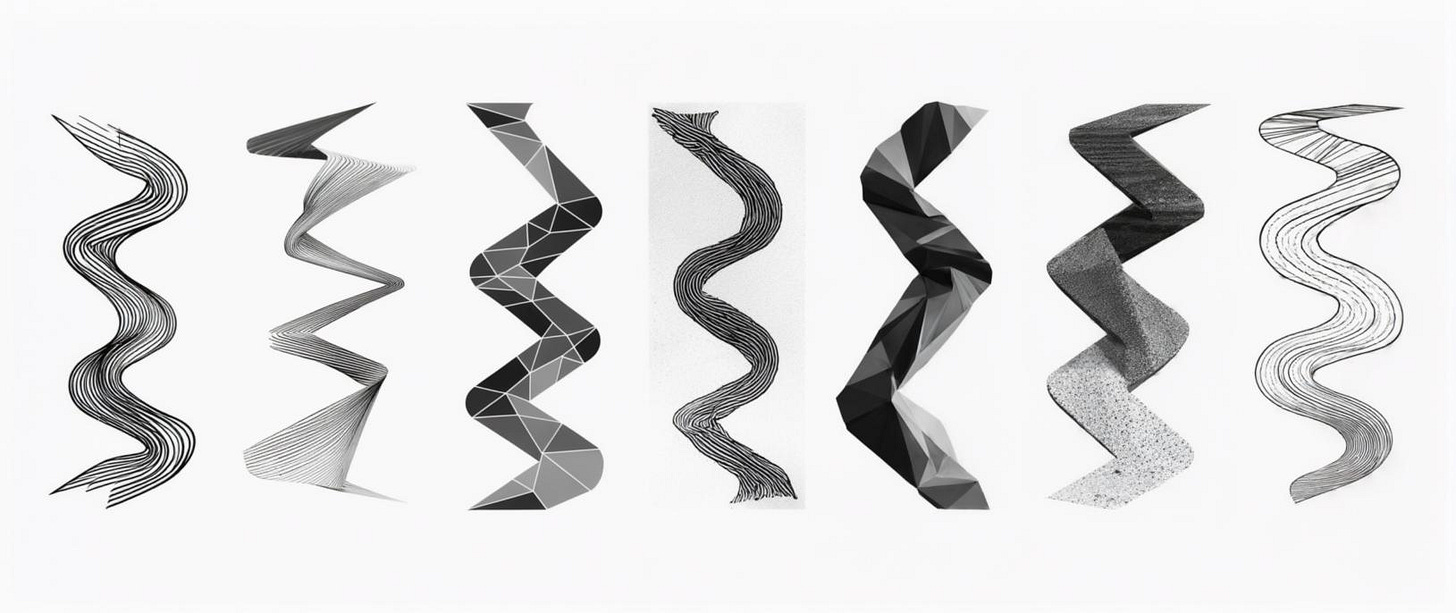

As benchmark scores converge (GPT-4o, Claude 3.7, and Gemini 2.5 cluster within about 5% on MMLU), general intelligence is becoming commoditized, and orchestration layers such as Anthropic’s MCP and OpenAI’s ChatGPT interface, with 500 million to 1 billion weekly users, define the new moats. Seven players (OpenAI, Anthropic, Google, xAI, Meta, Mistral, and DeepSeek) pursue distinct strategies, from interface control to enterprise efficiency, visualized in Figure 1’s radar chart. Investors should prioritize firms whose financial fundamentals (cash flows, margins, scalability) are anchored in these new moats and can support a DCF valuation, rather than chasing revenue hype.

This is Part 1 of a short series analyzing this strategic inflection point and its implications for building durable businesses in what I'm calling the Agentic Era.

The foundation model race is now defined less by model quality than by a restructuring of how intelligence translates into market power. This is a classic discontinuity moment: traditional valuation frameworks are failing while new moats emerge at an accelerating pace. The race is still on, and I aim to provide a framework for thinking about where value could ultimately accrue.

The Inflection Point Is Behind Us

While the market fixates on headline-grabbing funding rounds and model launches, a fundamental strategic realignment is underway in AI. At The Information's Financing the AI Revolution summit this week, executives discussed xAI Holdings' $20 billion raise at a $120 billion valuation—the second-largest private funding round in history, surpassed only by OpenAI's $40 billion capital infusion last month. Meanwhile, Alibaba's pointed criticism of DeepSeek ahead of its latest Qwen model release underscores the intensifying competitive dynamics in the Chinese AI ecosystem.

Yet these massive fundraising events mask a critical shift: raw model capabilities no longer determine winners in foundational AI. The competitive frontier has moved decisively toward orchestration quality, creating asymmetric return opportunities for investors who recognize this discontinuity before market valuations fully reflect it.

While capital keeps flowing toward model development, the real arbitrage lies in identifying which players are building defensible orchestration layers that connect model intelligence to the distribution endpoints where value is actually captured. Investors who identify these winners early stand to capture disproportionate returns as the market gradually recognizes the shift.

Orchestration Is the New Moat

The top models (GPT-4o, Claude 3.7 Sonnet, and Gemini 2.5 Pro) score within about 5% of one another on MMLU and other benchmarks, a sign that general intelligence is becoming commoditized. Other players (Meta’s Llama, Mistral Large, DeepSeek’s R1, xAI’s Grok) post competitive but slightly lower scores, which reinforces the trend.

What isn't commoditized, and is unlikely to be for the foreseeable future, is the orchestration layer: the complex systems that let models access data, perform multi-step reasoning, and integrate into existing workflows.

Orchestration creates moats by embedding intelligence into user-specific applications, which are harder to replicate than model improvements. Anthropic’s Model Context Protocol (MCP), adopted by OpenAI and Google in Q1 2025, standardizes data-model integration, locking in enterprise users. OpenAI’s ChatGPT draws 500 million weekly users, and perhaps as many as 1 billion, through its intuitive interface.

This orchestration advantage manifests through several critical capabilities:

✅ Connection protocols establishing standardized interfaces between models and external systems, such as MCP

✅ Agent frameworks guiding models through complex multi-stage reasoning processes

✅ Tool integration systems extending model capabilities through specialized services

✅ Interface design patterns translating raw intelligence into intuitive user experiences

The players who master these dimensions will capture disproportionate value regardless of whose model performs marginally better on the next benchmark.
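
To make the orchestration layer a little more concrete, here is a minimal Python sketch of two of the capabilities above: a tool registry and a multi-step plan that chains tools together. Everything in it is hypothetical. The `TOOLS` registry, `lookup_revenue`, `summarize`, and `run_plan` are illustrative names, and the registry is a stand-in for a connection protocol, not the MCP API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tool signature: each tool takes text and returns text.
ToolFn = Callable[[str], str]


def lookup_revenue(company: str) -> str:
    # Placeholder data source; a real system would query an external service.
    return f"revenue record for {company}"


def summarize(text: str) -> str:
    # Placeholder for a model call that condenses text.
    return f"summary of ({text})"


# Hypothetical registry mapping tool names to functions (not the MCP API).
TOOLS: Dict[str, ToolFn] = {
    "lookup_revenue": lookup_revenue,
    "summarize": summarize,
}


def run_plan(plan: List[str], initial_input: str) -> Tuple[str, List[str]]:
    """Run the named tools in order, feeding each output into the next step."""
    trace: List[str] = []
    value = initial_input
    for tool_name in plan:
        if tool_name not in TOOLS:
            raise KeyError(f"unknown tool: {tool_name}")
        value = TOOLS[tool_name](value)
        trace.append(f"{tool_name} -> {value}")
    return value, trace


result, trace = run_plan(["lookup_revenue", "summarize"], "ExampleCo")
print(result)
```

The model is almost incidental here: the plan, the registry, and the trace are the parts that become specific to a customer's workflow, which is the sense in which orchestration is harder to replicate than a benchmark gain.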

The Orchestration-Distribution Nexus

Orchestration forms the moat, but distribution is the vector for its value. Orchestration ensures intelligence is delivered effectively—through seamless, user-specific workflows—creating defensibility that competitors struggle to replicate. Distribution, meanwhile, is the reach of that intelligence, leveraging existing channels to scale adoption. The most successful players combine orchestration advantages with established distribution vectors.

OpenAI's interface orchestration, delivered through Microsoft's distribution network, is more powerful than either component alone. Similarly, xAI’s orchestration embeds AI into Tesla’s vehicle fleet and X’s 500 million+ users, creating always-on availability. Anthropic’s MCP, adopted by OpenAI and Google, standardizes agentic workflows, enhancing orchestration across platforms. As Google DeepMind CEO Demis Hassabis noted, MCP is “rapidly becoming an open standard for the AI agentic era”. This orchestration-distribution nexus drives strategic divergence, rewarding specialized approaches over generic capabilities.

Strategic Divergence: Seven Distinct Paths

The frontier AI landscape has fractured into seven distinct strategies, each pursuing unique defensibility.

Driven by model commoditization, companies are building moats through orchestration, distribution, or efficiency, not raw intelligence.

We focus on seven prominent players—OpenAI, Anthropic, Google, xAI, Meta, Mistral, and DeepSeek—excluding others like Alibaba (Qwen) or Cohere for clarity. Unlike traditional markets where competitors converge, this rapid divergence creates asymmetric return potential as winners emerge.

OpenAI: Market Leader with Increasing Friction

OpenAI maintains a dominant market position through ChatGPT's 500 million to 1 billion weekly users and Microsoft's extensive distribution network. Yet vulnerabilities are emerging through interface stagnation and the retirement of its plugin ecosystem. Its dependence on Microsoft's infrastructure also limits strategic flexibility despite recent efforts to diversify cloud partnerships (e.g., Oracle).

The fundamental question isn't whether ChatGPT will remain popular, but whether OpenAI can maintain its interface advantage as competitors develop more flexible orchestration systems.

Anthropic: The Trust Premium

Claude has established a clear differentiation through its safety-first approach, creating particular resonance with enterprise customers seeking reliability over raw capabilities. Claude 3.7 Sonnet introduces a hybrid reasoning model that delivers both fast responses and careful consideration—a technical innovation with significant market implications.

With $14 billion in funding and strategic partnerships with Amazon and Google, Anthropic has secured distribution while maintaining independence. Its Model Context Protocol (MCP) is a key differentiator: adoption by OpenAI and Google has established Anthropic as an orchestration leader.

Anthropic’s vulnerability could lie in scale: without a direct consumer presence, it risks becoming dependent on integration partners for distribution and market feedback.

Google: The Awakened Giant

Despite pioneering much of the fundamental research enabling the generative AI revolution, Google initially struggled to translate technical advantages into product leadership.

Gemini 2.5 Pro, integrated across Search, Android (2B+ users), and Workspace, leads in multimodal tasks like image reasoning and coding, per Alibaba’s Qwen3 tests (April 2025). In a recent post, Alberto Romero explains how Google is “winning on every AI front.”

Google's infrastructure capabilities—built on custom Tensor Processing Units (TPUs)—provide meaningful cost and performance advantages, while its data access creates natural orchestration opportunities through personalization.

Its primary vulnerability remains organizational: misalignment between DeepMind’s research teams and Google’s product teams is a known issue and has delayed Gemini’s market impact.

xAI: The Hardware Integration Play

Elon Musk’s xAI embeds AI into Tesla vehicles and X’s 500 million+ users, creating ambient intelligence.

While Grok's model performance may not lead benchmarks, its ubiquitous presence creates a different form of defensibility—one based on passive integration rather than active interface superiority. The question isn't whether Grok is the most intelligent model, but whether its integration advantages create sufficient value to overcome technical limitations.

Meta: Ecosystem Over Ownership

Meta’s Llama 4 bets on open-source scale, with 1B+ downloads and 170K+ GitHub stars. Llama 4 Scout’s 10-million-token context window and Maverick’s multimodal capabilities showcase technical excellence. The bet is that value will accrue through integration with Meta’s core platforms such as WhatsApp and Instagram, but the path to capturing that value remains unclear.

Mistral: Technical Excellence Seeking Application

French startup Mistral has emerged as a technical leader, delivering high-performance products such as the Codestral coding model and the Le Chat assistant, which target high-value domains like coding and enterprise efficiency. By focusing on precision over breadth, Mistral has carved out a premium niche and, as of Q1 2025, earned credibility with clients such as BNP Paribas, Axa, and the French Ministry of Defense. These partnerships signal early enterprise traction and position Mistral as a trusted partner in Europe’s AI ecosystem.

While Mistral’s orchestration capabilities and distribution channels lag behind giants like OpenAI or Google, its capital-efficient approach and open-source models, such as Mixtral, are fostering a growing developer community. To capture greater value, Mistral must strengthen its user-facing systems or secure high-profile integrations. Today Mistral is a technically superior player that could scale into a leading enterprise AI provider, but only if it leverages its European foothold effectively.

DeepSeek: The Efficiency Disruptor

DeepSeek has fundamentally reset economic expectations through innovative engineering approaches, delivering GPT-4 quality at a fraction of the computational cost. Its "mixture of experts" architecture and reinforcement learning techniques represent genuine architectural innovation rather than mere scale advantages.

By releasing R1 under the MIT license, DeepSeek is following Meta's ecosystem play but with a critical efficiency advantage. While export controls on advanced semiconductors may limit global scaling, this approach could prove decisive in markets with computational constraints, potentially creating an asymmetric advantage in emerging economies.

The Fundamental Metrics Shift

Traditional benchmarks like MMLU do not tell the full story, capturing intelligence but not market power or financial value. With GPT-4o, Claude 3.7, and Gemini 2.5 converging, operational and financial metrics—tied to orchestration—define defensibility:

• Interface retention: User stickiness within orchestration environments (OpenAI's 500 million to 1 billion weekly users)

• API value density: Revenue per computation rather than raw usage volume

• Embedded distribution: Integration depth within existing platforms and workflows

• Developer ecosystem: Community size and contribution velocity (Meta's 170K+ GitHub stars)

• Task automation rate: Success percentage on complex multi-step processes

• Efficiency ratio: Performance relative to computational investment

These metrics reflect orchestration’s role in practical defensibility. Interface retention (e.g., OpenAI’s weekly users) depends on user-friendly orchestration layers. API value density measures how well orchestration turns computation into profitable API usage, as seen in Anthropic’s Claude. Task automation rate captures orchestration’s ability to handle multi-step workflows, which is critical for enterprise adoption.

Scores in Figure 1 range from 1 (weak) to 10 (exceptional) and draw on qualitative and quantitative evidence available as of April 2025. They come from the author’s own analysis and reflect only the author’s view.

Discounted cash flow (DCF) valuation demands predictable cash flows, high margins, and low churn, which sit uneasily with AI’s compute-heavy cost structure. By its 2004 IPO, Google had already shown scalable margins ($106m profit on $962m revenue in 2003), a benchmark that AI firms will have to meet.
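
For readers less familiar with the mechanics, the sketch below shows a textbook DCF: discount each forecast cash flow, then add a Gordon-growth terminal value. The function name `dcf_value`, the cash-flow path, the 12% discount rate, and the 3% terminal growth rate are all hypothetical choices for illustration and do not describe any company discussed here.

```python
from typing import Sequence


def dcf_value(cash_flows: Sequence[float], discount_rate: float,
              terminal_growth: float) -> float:
    """Present value of forecast cash flows plus a Gordon-growth terminal value."""
    if not cash_flows:
        raise ValueError("need at least one forecast cash flow")
    if discount_rate <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth")
    # Discount each forecast year's cash flow back to today (years 1, 2, ...).
    pv_explicit = sum(cf / (1 + discount_rate) ** year
                      for year, cf in enumerate(cash_flows, start=1))
    # Terminal value at the end of the forecast, then discounted to today.
    terminal = (cash_flows[-1] * (1 + terminal_growth)
                / (discount_rate - terminal_growth))
    pv_terminal = terminal / (1 + discount_rate) ** len(cash_flows)
    return pv_explicit + pv_terminal


# Hypothetical free cash flows in $bn: early losses, later profits.
hypothetical_fcf = [-5.0, -2.0, 1.0, 4.0, 8.0]
value = dcf_value(hypothetical_fcf, discount_rate=0.12, terminal_growth=0.03)
print(f"Illustrative value: ${value:.1f}bn")
```

With a loss-making start like this one, most of the value sits in the terminal term, so the result is highly sensitive to the long-run margin and growth assumptions. That sensitivity is why predictable cash flows matter so much to this framework.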

As an example, OpenAI’s $12.7B 2025 revenue projection (up from $3.7B in 2024) says nothing about its negative margins ($5B in losses) or its modest share of paying users (a reported 20m, or 4% of 500m weekly users). The Information recently reported revenue projections of $125B by 2029 with an estimated gross margin of 70%, but the unit economics behind those figures were not disclosed.
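
The arithmetic behind these figures is easy to check. The short script below uses only the numbers quoted in this article; the variable names are mine, and the growth rate assumes four years of compounding between the 2025 and 2029 projections.

```python
# Figures quoted in the article (revenue in $bn, users in millions).
revenue_2024_bn = 3.7
revenue_2025_bn = 12.7
revenue_2029_bn = 125.0
paid_users_m = 20
weekly_users_low_m = 500
weekly_users_high_m = 1000

# Year-over-year revenue multiple implied by the 2025 projection.
growth_multiple = revenue_2025_bn / revenue_2024_bn

# Paid share under both weekly-user estimates cited in the article.
paid_share_500m = paid_users_m / weekly_users_low_m
paid_share_1b = paid_users_m / weekly_users_high_m

# Compound annual growth needed to go from the 2025 to the 2029 projection
# (assumes four compounding years: 2026, 2027, 2028, 2029).
implied_cagr = (revenue_2029_bn / revenue_2025_bn) ** (1 / 4) - 1

# Google's net margin from the figures cited above ($106m on $962m).
google_margin = 106 / 962

print(f"2025 vs 2024 revenue multiple: {growth_multiple:.2f}x")
print(f"Paid share at 500m users: {paid_share_500m:.1%}")
print(f"Paid share at 1bn users: {paid_share_1b:.1%}")
print(f"Implied 2025-2029 CAGR: {implied_cagr:.1%}")
print(f"Google net margin: {google_margin:.1%}")
```

If the 1 billion weekly-user estimate is the right one, the paid share halves, which makes the conversion question sharper rather than softer.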

Yes, frontier AI companies, like any business, will ultimately be valued on their ability to generate free cash flow, not just revenue. Investors must develop conviction now that these firms can build defensible moats through the metrics above, ensuring they thrive in a DCF-driven future.

When Raw Power Still Matters

While orchestration and distribution have become primary differentiators, model capabilities remain relevant in specialized domains where precision is paramount. Breakthrough innovations in reasoning (like OpenAI's o-series) or multimodal understanding (like Gemini 2.5) could still create meaningful advantages in sectors like healthcare, finance, and specialized enterprise applications.

The critical insight isn't that model quality doesn't matter, but that it matters primarily when translated through effective orchestration into distinct user value. Raw intelligence without orchestrated application increasingly represents unmonetized potential rather than market advantage.

Beyond model quality, regulatory shifts (e.g., EU AI Act enforcement) or competition for AI talent could reshape the landscape, particularly for smaller players like Mistral or DeepSeek.

The Investment Implications

We're witnessing a classic discontinuity moment: traditional valuation frameworks fail, and that failure is exactly where the greatest investment alpha is generated. For investors navigating this landscape, four key questions determine potential returns:

✅ Value Translation: How effectively does the company convert model intelligence into user-specific applications?

✅ Distribution Leverage: Does the strategy leverage existing channels or require building new ones?

✅ Efficiency Balance: Does the approach optimize both performance and computational economics?

✅ Strategic Resilience: Can the business model withstand competitive responses and regulatory evolution?

Companies that convincingly address these questions—balancing user value, scalable channels, cost discipline, and regulatory agility—will build the financial fundamentals required for DCF-driven valuations, capturing outsized returns as the market matures.

Investors must act swiftly to identify these moat-builders before valuations fully reflect their defensibility. The AI revolution may be new, but the rules of finance are timeless: only firms with robust fundamentals will thrive in the long run and deliver the cash flows that justify today’s bold bets.

The Path Forward

The frontier AI market is entering a phase of strategic crystallization where winners and losers will be determined not by benchmark superiority but by moat construction. Orchestration quality—not raw model intelligence—will increasingly determine market leadership.

By 2027, expect consolidation as orchestration leaders acquire or outpace smaller players, while hardware integration and efficiency redefine the competitive landscape.

For institutional investors, the arbitrage opportunity exists in identifying which companies are building orchestration advantages that markets haven't yet fully priced. The convergence in model capabilities creates a perception that the entire stack is commoditized, which obscures the moats emerging in orchestration, distribution, and efficiency.

The companies that turn intelligence into distribution will capture the majority of value in this next phase of frontier AI development. Everything else is just noise.

About Decoding Discontinuity

Generative AI represents a Discontinuity. In this environment, static defenses fail. What's needed is a dynamic approach that assesses not just current advantages, but resilience and adaptation potential in the face of technological discontinuities. Enter the Durable Growth Moat.

My work bridges the gap between cutting-edge AI research and real-world business impact, enabling higher returns through actionable insights. Want to talk about GenAI and Discontinuity? Just reach out.