Three Years In: GenAI's Discontinuity Proven in Silicon, Unproven in Revenue

How the market's split verdict reveals we're exactly where discontinuities always pause before their exponential phase.

Three years after ChatGPT’s launch, the market has rendered a split verdict on the generative AI generation.

Traditional software companies are hemorrhaging value. Infrastructure and compute have captured trillions in value. Hyperscalers are deploying roughly $400 billion annually in CapEx with methodical conviction. Productivity gains are nascent. New revenue streams - not counting hyperscalers - are still absent.

Is the market confused? Are hyperscalers spending too recklessly? Where is the revenue that justifies this infrastructure build-out?

What might look irrational can be understood through a critical lens: Discontinuity.

The absence of exponential returns and transformation of these markets is not evidence of failure, but rather the reality that we are precisely in the phase of discontinuity where doubt precedes the exponential curve. Recognizing why requires an understanding of discontinuity as an analytical lens.

Discontinuities don’t follow linear adoption curves. But they do exhibit a specific pattern. In the early stages, infrastructure is built during maximum skepticism, laying a foundation. Capability is proven in constrained domains. Economic transformation is delayed while new frameworks replace old ones.

These are often happening beneath the surface, giving the appearance of stagnation. It is only when all these pieces are in place and aligned that adoption gathers pace and then exponential acceleration seems to suddenly occur.

Three years after the release of ChatGPT, we are still in that early phase. These actors, each in their own way, have recognized the fundamental pattern of technological discontinuity that has been unleashed by AI. There is a systemic re-allocation of capital to the foundational infrastructure pieces needed for the exponential future.

That discontinuity ultimately isn’t just generative AI (text, images, chat). It is autonomous systems orchestrating complete workflows, capturing value from labor markets rather than IT budgets, in tech and in the real world. It is potentially one of the greatest value migrations in history.

If it happens.

Expecting such a transformation so fast is about as absurd as experts who thought brick-and-mortar retailers would be gone by 2001. But given that discontinuity is not linear, what we can seek to understand are the signs that help us to decode where we are along the journey toward that exponential economy of the future.

Doubt Appears Before the Curve

When I wrote my original Discontinuity thesis in August 2024, I said:

“Though critics like to mock Silicon Valley when bubbles emerge, tech leaders have proved to be remarkably prescient about what will happen. What they tend to get wrong is when it will happen.”

The philosopher of science Gaston Bachelard described “epistemological rupture,” moments when progress requires abandoning old frameworks entirely. During these ruptures, there is a necessary period where old categories of thought fail, but new ones haven’t fully formed.

As I’ve followed the AI sector intensely over the past three years, I am struck by how many people still don’t seem to grasp how profoundly different this wave is compared to past ones. They have been through previous stages of technological disruption, and so they see this as just the latest in a long spectrum of changes that require them to be agile and adaptive.

But it’s important to differentiate between disruption, where the curve of change shifts, and discontinuity, where the curve breaks completely. Before I dive back into benchmarks and data points, consider this thought exercise from the perspective of a CIO to understand how the generative and agentic AI wave is different from other recent technology cycles.

When cloud computing disrupted on-premise software, companies could respond by rebuilding their products as SaaS offerings. When mobile disrupted desktop computing, companies could create mobile apps and responsive interfaces. The transition was difficult, expensive, and many incumbents failed.

But in both cases, a company moved from one piece of software with a defined set of features and workflows to a new platform with a defined set of features and workflows. The path forward was visible. The strategies that worked in the previous era could be adapted. The metrics that mattered before still mattered, just with different coefficients.

That is disruption.

Now consider how this looks with generative and agentic AI.

Companies are not being given a piece of software out of the box or a plug-and-play SaaS platform with pre-defined features and workflows. They are being handed a tool and being told, “Here, imagine what you can do with this!” They have gone from a world of clear rules to a universe of infinite choices and are told to chase their dreams. Nothing in their experience, either as managers or developers, has prepared them for such a moment. Nothing in the history or culture or structure of their business has been built for this. They have no mental models to guide them.

This is Discontinuity.

Is it so surprising, then, to learn from last summer’s MIT report that only 5% of enterprises were reporting any ROI from their initial GenAI projects? If they took these new tools and tried to insert them into existing workforce structures and infrastructure using old playbooks, expecting to quickly save a few bucks or even reinvent their company, failure and frustration were a given. They had not truly recognized the need to reset their mental framework, at the most atomic level, in just about every aspect of their company. As the study’s authors noted, the failing was not “driven by model quality or regulation, but seems to be determined by approach... Most fail due to brittle workflows, lack of contextual learning, and misalignment with day-to-day operations.”

In other words, they are currently in that gap that Bachelard identified.

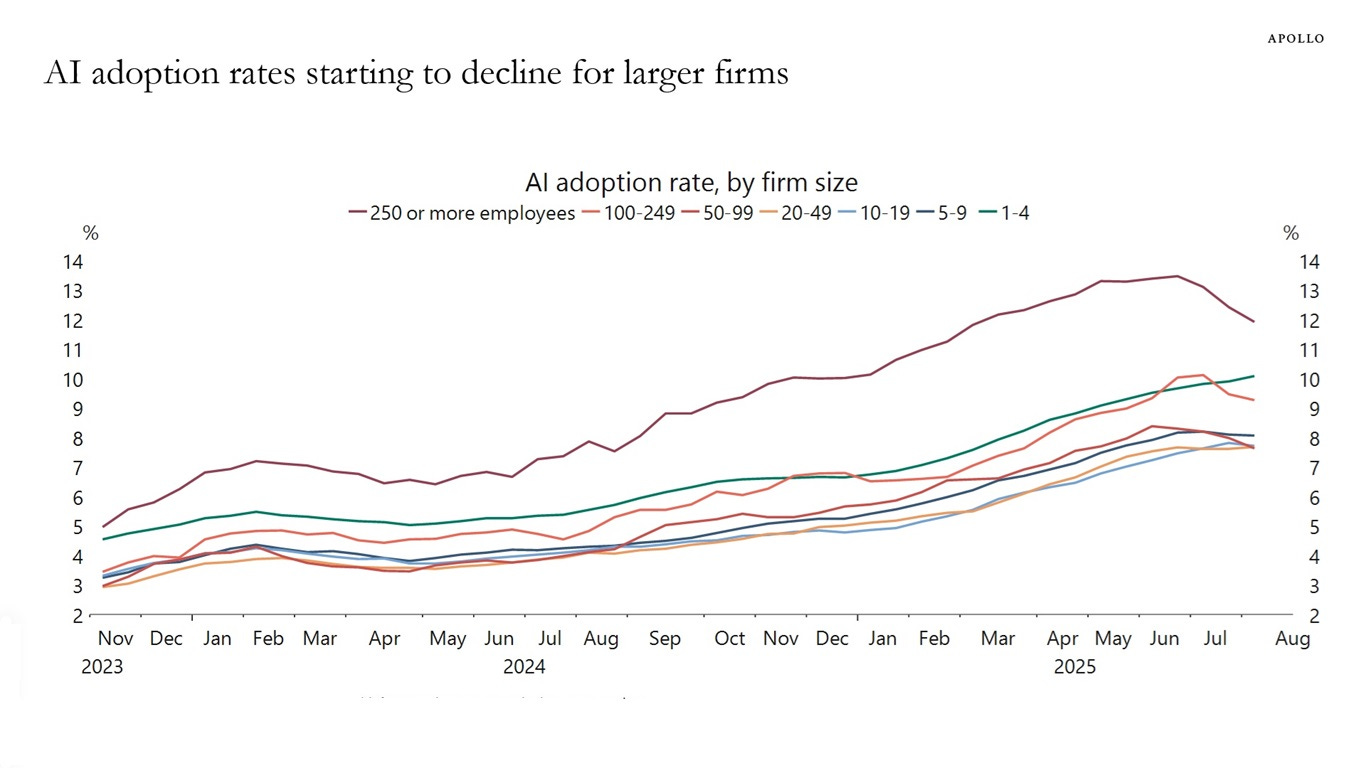

As a result, we see that recent data from Apollo Academy shows AI adoption rates flattening across all firm sizes in the US. The narrative has shifted from unbridled optimism to measured concern: Is the AI boom stalling? Has the market overshot?

Figure 1 – AI adoption rate by firm size per Census Bureau (source: Apollo analysis, Torsten Sløk)

Gloom and disappointment have a way of compounding.

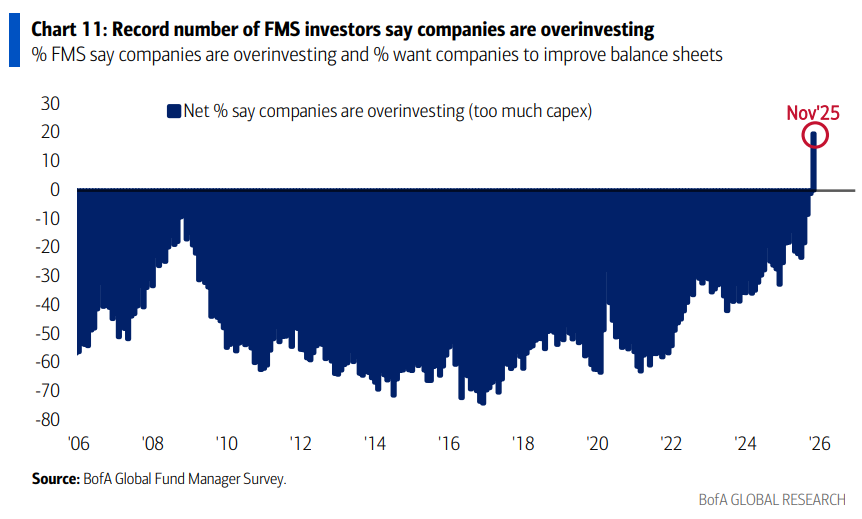

For the first time since the 2008 financial crisis, fund managers believe companies are over-investing. According to BofA’s November 2025 Global Fund Manager Survey, the net percentage of fund managers saying companies are deploying too much capital has swung from deeply negative (indicating under-investment concerns) to positive for the first time in nearly two decades. This represents peak skepticism about the hyperscaler buildout.

Figure 2 – BofA Survey on capex (source: BofA)

Caught in between are legions of traditional software companies trying to navigate both the discontinuity and market expectations.

Adobe is down 37% over the past 12 months. Salesforce is down 29% over the past 12 months. ServiceNow, among the more resilient, is still down 22%. And yet, those are among the strongest names in enterprise software, and all three have been swift to deploy generative AI.

Meanwhile, AI “adopters,” in tech and non-tech, are delivering strong shareholder value, per Morgan Stanley, which projects that AI-driven efficiency will contribute an incremental 30 basis points and 50 basis points to S&P 500 net margins in 2026 and 2027, respectively.

Walmart CEO Doug McMillon, for example, has been aggressively talking up the company’s investments, publicly stating that AI would completely transform every aspect of how the company operates. Although he believes the company’s headcount of 2 million will stay the same, he expects the nature of every role to change profoundly, and the company along with it.

The company’s challenge now is to figure out how to do it by re-examining everything. One of the new roles: An “agent builder” for creating AI tools to help merchants. “It’s very clear that AI is going to change literally every job,” McMillon said. “Maybe there’s a job in the world that AI won’t change, but I haven’t thought of it.”

Last month, Walmart announced it would move its stock listing from the NYSE to the tech-heavy Nasdaq. Its stock is up 23.4% YTD.

Of course, we have plenty of recent lessons that should offer some guidance here about the need to expect longer timelines. Consider the internet’s adoption curve. In 1995, 14% of US adults used the internet. Then came the dot-com crash in 2000. The consensus declared the internet’s promise overblown and the revolution over.

What followed wasn’t stagnation but true exponential growth. By 2005, two-thirds of Americans were online; by 2022, 66% of humanity. The global user base exploded from 45 million in 1996 to 5.3 billion by 2022.

Throughout this period of maximum skepticism, infrastructure continued to be built out. Yes, there was dark fiber. Then broadband came to the home. Then 3G and 4G mobile networks. And then widespread adoption of consumer wi-fi. These coalesced to become the foundation for the internet economy of the 2000s.

The dot-com survivors, such as Amazon, Google, and eBay, weren’t those with the most capital or the best technology of 1999. They were those whose architecture anticipated an environment that didn’t yet exist. Their structures were pre-adapted for the selection pressures that were coming.

When dealing with true Discontinuity, the scale of change is so massive that timelines are impossible to predict. So many pieces must align before exponential potential is unleashed. When it happens, the speed of change is startling.

This pattern repeats. Doubt appears. The plateau is a feature of discontinuities, not a signal of failure. Old categories of thought become obsolete; new frameworks haven’t fully crystallized. By all indications, we are on that plateau now.

To be clear, no one can say with any certainty that we will ever move beyond it. But because we know the discontinuity pattern repeats, we can also map out what signals to watch that might indicate that we are starting to move along this new curve toward (hopefully!) this new world of exponential growth.

Signal 1: Infrastructure + Trillions of Value Migration

Let’s start here because the numbers are unambiguous. Capital reallocation is occurring on a historic scale. Hyperscalers Amazon, Microsoft, Google, and Meta are projected to spend close to $400bn on Capex in 2025 alone, a figure that represents a near-doubling from 2023 levels. This should continue in 2026.

There are certainly critics who think the people in charge have, to put it gently, lost touch with reality. But let’s step back and remember that whatever missteps they may have made here or there over the past 25 years, these are among the most disciplined capital allocators in corporate history, with decades of demonstrated returns on infrastructure investments.

No one, particularly the CEO of a publicly traded company, should be above criticism. But given their track records, they have also earned at least some benefit of the doubt here.

The beneficiaries of this spending so far are clear. Nvidia hit a peak of about $5 trillion in market capitalization in October. The colossus is now being attacked on all fronts, even though the financial fundamentals show Nvidia is killing it. AMD, Broadcom, and a constellation of infrastructure players have seen similar trajectories. And the massive data center build-out has continued.

The risks are massive. Estimates of trillions of dollars in infrastructure still needed. Increasingly complex financial leverage. Massive energy and water demands. Physical and regulatory constraints. The compute these companies claim they need will not happen overnight.

Signal 2: Capability

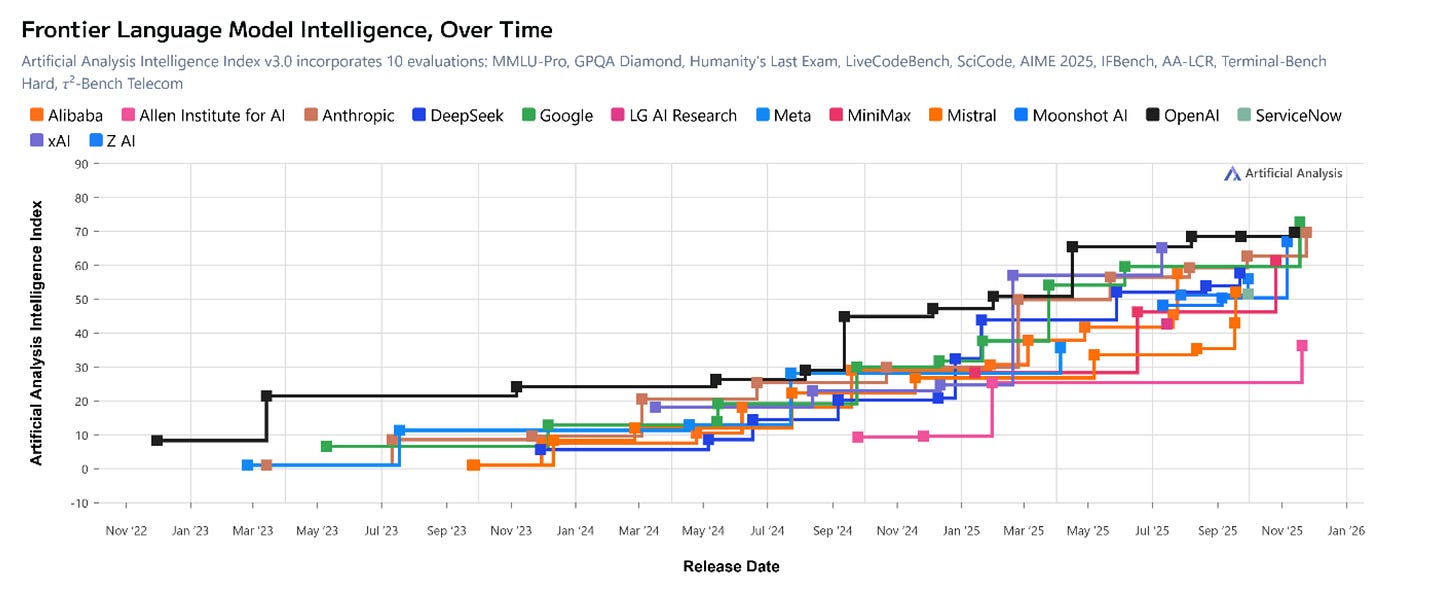

The rapid advancement of model capabilities is also beyond dispute.

This isn’t just about doing existing things 10% better. We’re talking about capabilities that didn’t exist three years ago now working at production scale. Three years is a short period of time.

Figure 3 – Frontier Language Model Intelligence (source: Artificial Analysis)

As Ethan Mollick noted last week when testing Gemini 3: three years ago, AI could describe a concept. In 2025, AI can architect a solution, write the code, build the interface, and deploy a functional application autonomously. Is this incremental? No, this is a qualitative transformation in what is possible.

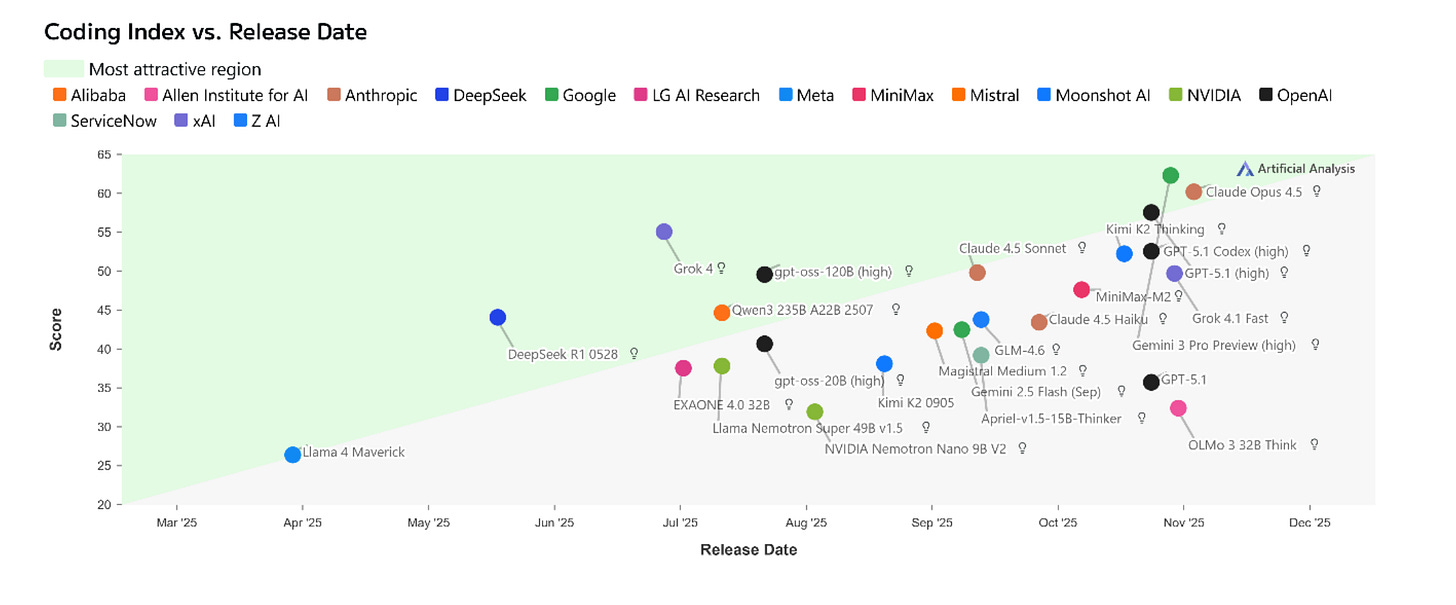

Importantly, this capability is proven and reproducible across multiple vendors. It’s not one model, one company, or one approach. Frontier performance has converged: multiple models achieve 65-75% on SWE-Bench, multiple coding tools are capturing enterprise revenue, and the Coding Index is trending higher and higher in just a few months.

Figure 4 – Coding Index vs. Release date (source: Artificial Analysis)

And the leaderboard positions are changing fast: as of today, Gemini 3 Pro preview and Claude Opus 4.5 are the highest intelligence models, followed by GPT-5.1 and GPT-5 Codex per Artificial Analysis. Just a couple of weeks ago, when GPT-5 was released, this was still only a possibility.

The progression is accelerating in a way fundamentally different from previous AI winters. The gap between capability announcement and production deployment has been compressed from years to months. GPT-4’s initial capabilities rolled out to a limited set of users over several months in 2023 with tight restrictions, and then gradually expanded to more general availability over the next year. In sharp contrast, the day Google announced Gemini 3, it was available globally, already baked right into many of its most widely used products.

This acceleration in deployment pace signals that infrastructure, integration frameworks, and enterprise adoption patterns are already in place. The system is ready to absorb new capabilities the moment they appear.

This lays the groundwork for the Agentic Era, which is when we will start to unlock the Orchestration Economics that will deliver exponential growth.

Let’s take a look at some benchmarks that add to this evidence.

PhD-Level Reasoning as foundational capability

First, Humanity’s Last Exam (HLE). This benchmark matters for predicting production agentic capability, much more than text generation quality. HLE is a collection of expert-written questions spanning mathematics, the sciences, and other academic fields. Answering them requires sustained reasoning across multiple steps, identifying which tools to use when, backtracking from dead ends, and synthesizing approaches from different domains. These are precisely the cognitive primitives required for autonomous workflow orchestration in business environments.

In recent breakthroughs, Kimi K2 Thinking achieved 44.9% on HLE (text-only, with tools), surpassing GPT-5’s 41.7% and Claude Sonnet 4.5’s 32%. Gemini 3 achieved comparable results. More importantly, these models achieved this performance while maintaining reliability across hundreds of sequential tool calls - demonstrating sustained autonomous operation at scale.

This matters because HLE performance predicts the capacity to decompose complex goals into executable plans, adapt those plans based on environmental feedback, and persist toward objectives across hundreds of discrete actions. As I noted in my analysis of second-wave AI systems, this represents “more reliable and capable autonomous agents” that can engage in deliberate, logical reasoning and adapt their computational approach based on problem complexity.

Kimi continues to push the boundaries here.

SWE-Bench Verified tests whether AI agents can autonomously fix real bugs in actual production codebases in repositories with millions of lines of code, complex dependencies, and integration requirements. The agent must understand the codebase, identify the bug’s root cause, implement a fix that doesn’t break other functionality, and validate the solution. Kimi K2 Thinking scored 71.3% on SWE-Bench Verified.

BrowseComp tests whether agents can autonomously navigate the open web to find and synthesize information, the kind of research task that consumes massive amounts of knowledge worker time. Kimi K2 Thinking achieved 60.2%, more than double the human baseline of 29.2%.

The combination of SWE-Bench (structured) and BrowseComp (unstructured) demonstrates general autonomous capability across the spectrum of environments, from codebases to the open web. Again, another huge leap forward in a relatively short time span.

Though I’ll caution that benchmarks are still just benchmarks. Helpful for tracking capabilities and progress. But these giant performance leaps have to translate to production environments. This is yet another indicator worth watching closely.

Signal 3: The Economics

Perhaps the most dramatic enabler is inference cost reduction.

According to Stanford’s 2025 AI Index Report, the cost of querying a GPT-3.5-equivalent model dropped from $20.00 per million tokens in November 2022 to $0.07 per million tokens by October 2024, a more than 280-fold reduction in just under two years. Continued cost declines at current rates would eliminate cost as a primary barrier to scaling within 12-24 months, enabling enterprises to deploy AI at production scale without prohibitive compute budgets.

Combine that with the rise of open-source frontier models reaching performance parity with closed state-of-the-art LLMs – and even surpassing them by some benchmarks, as we saw with Kimi K2 Thinking – and we see yet another lever being pulled that chips away at costs.
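The arithmetic behind those two price points can be checked with a short sketch. It uses only the prices and dates quoted above; the assumption of a constant monthly rate of decline is mine, purely for illustration (real prices fall in steps as new models ship), and the function names are hypothetical.

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def fold_reduction(start_price: float, end_price: float) -> float:
    """How many times cheaper the end price is than the start price."""
    return start_price / end_price


def implied_monthly_decline(start_price: float, end_price: float, months: int) -> float:
    """Constant monthly rate r such that start * (1 - r) ** months == end.

    Assumption: a smooth geometric decline, which is a simplification.
    """
    return 1 - (end_price / start_price) ** (1 / months)


# Figures quoted from the Stanford 2025 AI Index Report (USD per million tokens).
START_PRICE = 20.00
END_PRICE = 0.07
span_months = months_between(date(2022, 11, 1), date(2024, 10, 1))

fold = fold_reduction(START_PRICE, END_PRICE)
monthly_rate = implied_monthly_decline(START_PRICE, END_PRICE, span_months)

print(f"Span: {span_months} months")
print(f"Reduction: {fold:.0f}-fold")
print(f"Implied average monthly price decline: {monthly_rate:.1%}")
```

Under that simplifying assumption, the implied decline works out to a bit more than a fifth of the price disappearing every month, which is why even short planning horizons can change the cost picture materially.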

Finally, the stunning release of Gemini 3 by Google last month has flipped the competitive economics by introducing a frontier model fully developed by a full-stack competitor that has the potential for very different inference costs and distribution reach.

All of these things have the potential to accelerate adoption, and yet they are occurring so fast that it’s unlikely that most enterprise leaders have truly had time to absorb the implications. But when it comes to things like agents, such dramatic drops in costs radically lower the risks of experimenting.

Signal 4: Adoption/Deployment

For all the talk of AI backlash and disillusionment, the numbers on the ground tell a different story. A new report from Gartner projects that AI application software investments (CRM, ERP, and other workforce productivity platforms) will more than triple between 2024 and 2026 to $270 billion. During that same period, AI infrastructure software spending (app development, storage, security, and virtualization tools) is forecasted to increase from $60 billion in 2024 to $230 billion.

Software spending growth will accelerate to 15.2% in 2026, per Gartner. But what is interesting is that “out of that stunning 15.2% growth in software spending, roughly 9% is just inflation. That leaves about 6% for actual new spending.” And that inflation is expected to largely come from price increases tied to AI features.

The report is not an outlier. Survey data shows 88% of organizations regularly use AI in at least one business function, but only 23% are scaling agentic systems, according to McKinsey. The gap between adoption and scaling is stark. But the 23% figure still represents significant acceleration.

Again, this is perfectly normal given where we are in agentic AI today. Let’s recall that Anthropic’s Model Context Protocol (MCP) is barely 1 year old. (How fast are things moving? In the MCP announcement, Anthropic mainly refers to “AI Assistants” rather than “agents.”) But that hasn’t stopped some critics from deciding it’s not too soon to declare agentic AI a bust. Still, there is a reason multi-agent systems are not a reality today.

Despite this short timeframe, we are rapidly getting clarity on how and where agents work, offering a critical view of how to implement them in a collaborative way to achieve short-term cost savings and productivity gains. As a recent Carnegie Mellon and Stanford University study found, AI agents complete “readily programmable” tasks 88.3% faster at a 90.4% - 96.2% lower cost than humans. The key is for organizations to determine what those tasks are and structure the right human-machine hybrid collaborations to achieve those gains.

Meanwhile, as is common with any new technology, more powerful use cases are emerging. While it may not be the “killer app” in the same way that the spreadsheet was for the first PCs, the “coding wedge” is turning out to be a powerful demonstration of how the right combination of capability and cost can drive deployment.

Software engineering is where autonomous agents have crossed the reliability threshold for production deployment. Consider the example of Kimi K2 Thinking.

Coding capabilities are so robust that leading LLM developers Anthropic and OpenAI are focused on the “coding wedge” as a way to win developers and, in doing so, gain leverage to win enterprises.

But the value of coding as a use case is widely recognized.

GitHub Copilot reports that AI-assisted code represents 40%+ of total code written by users. Major tech companies report internal developer productivity gains of 30-50% from agentic coding tools in production.

The Information reports that Cursor, an AI-native code editor, is raising a new round of funding at a $30 billion valuation.

Software engineering is the first domain to cross the reliability threshold. As such, it’s the template for future use cases.

Coding requires understanding complex structured information (codebases), reasoning about system behavior (dependencies), using tools precisely (compilers, debuggers), iterating based on feedback (test failures), and maintaining context across long sequences. These capabilities generalize to legal document analysis, financial modeling, and medical diagnosis. Basically, any domain involving structured reasoning and tool use.

Moving Off the Plateau: Orchestration Economics

The doubt in the market stems from a simple question: Where is the revenue that justifies a build-out approaching $400B a year?

The answer requires abandoning Traditional Economics for Orchestration Economics.

To grasp the implications of the world that these companies envision with the tools and infrastructure they are creating requires moving past old categories of thought. The parameters of this future world are not always easy to describe when trying to map them against the present day.

In a recent podcast, Tesla CEO Elon Musk tried to explain the exponential impact of AI and robotics:

“So long as civilization keeps advancing, we will have AI and robotics at very large scale,” he said. “That’s the only thing that’s gonna solve for the US debt crisis; In 3 years or less, my guess is goods and services growth will exceed money supply growth. But it probably would cause significant deflation.”

How? Anyone who is narrowly focused on AI as a productivity gambit is truly thinking too small. The traditional framework that views software as a tool for human workers assumed that humans would remain central to workflow orchestration. Optimization meant better UIs, faster performance, and intuitive navigation. Value capture meant per-seat pricing scaled by human headcounts.

The emerging framework is built around agents as autonomous orchestrators. This hybrid world assumes humans define goals, but agents execute workflows end-to-end. Optimization means reliability across hundreds of autonomous steps, graceful failure handling, and accurate tool selection. Value capture means per-workflow pricing scaled by economic outcomes, not headcount.

In this Inference economy, agents don’t replace software applications; they replace the human orchestration between them.

Enterprises pay for outcomes: An agent processing a customer service ticket end-to-end generates revenue scaled to the outcome, not the seat.

Inference is the new labor: Every step (reasoning, tool calling, evaluating) consumes compute.

Value Capture: The platform captures the delta between the workflow value ($50 of labor) and the inference cost ($5 of compute).
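The three points above reduce to simple unit economics, sketched below as a rough illustration. The $50 labor value and $5 inference cost are the figures from the text; the class name, the field names, and the one-million-workflows volume are hypothetical and chosen only to show how the delta scales.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    """One workflow completed end-to-end by an agent (illustrative model)."""

    labor_value_usd: float     # what the workflow is worth when priced as labor
    inference_cost_usd: float  # compute consumed by reasoning, tool calls, evaluation

    @property
    def captured_delta_usd(self) -> float:
        """Value the platform can capture per completed workflow."""
        return self.labor_value_usd - self.inference_cost_usd

    @property
    def margin(self) -> float:
        """Captured delta as a share of the workflow's labor value."""
        return self.captured_delta_usd / self.labor_value_usd


def annual_delta_usd(workflow: Workflow, workflows_per_year: int) -> float:
    """Total delta if the same workflow is completed workflows_per_year times."""
    return workflow.captured_delta_usd * workflows_per_year


# Figures from the text: $50 of labor replaced by $5 of compute.
ticket = Workflow(labor_value_usd=50.0, inference_cost_usd=5.0)

# Hypothetical volume, for illustration only.
HYPOTHETICAL_VOLUME = 1_000_000

print(f"Delta per workflow: ${ticket.captured_delta_usd:.2f}")
print(f"Margin on labor value: {ticket.margin:.0%}")
print(f"Annual delta at {HYPOTHETICAL_VOLUME:,} workflows: "
      f"${annual_delta_usd(ticket, HYPOTHETICAL_VOLUME):,.0f}")
```

The point of modeling it this way is that revenue scales with workflows completed rather than seats sold, so the inference cost line, not headcount, becomes the variable that determines margin.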

This is why massive infrastructure investment doesn’t seem to make economic sense without visible software revenue today.

The potential revenue to justify this spending doesn’t come from selling better software. It comes from capturing value directly from labor markets through autonomous workflow completion, with compute revenue flowing to the infrastructure layer.

Traditional enterprise software repricing only makes sense through Orchestration Economics.

As these discontinuity pieces come into place, the market is trying to reprice companies based on their architectural fitness for autonomous orchestration vs. human-mediated usage. The market isn’t confused about software’s future: It is executing ruthlessly efficient selection based on structural fitness for the post-rupture environment. Or at least it is trying to.

Convergence to Exponential

Here are the trillion-dollar questions you want answered: How fast does this exponential world arrive? How quickly does enterprise deployment cross from 23% scaling to majority adoption? Will that be in 6 months or 10 years? Or...?

Here is the truth: Nobody knows. Sorry.

Discontinuity is not a linear process. I did warn you at the very start.

However, remember the 4 signals: infrastructure, capability, economics, and adoption.

What to watch for now is how each of these is advancing and converging. As we see with the Coding Wedge, this is where the exponential effects start to appear.

Of course, there are clues within these clues that we can track that will offer ongoing insight:

- Progress in multi-modality: Handling text, images, audio, video, and more simultaneously enables more human-like autonomy, boosting agentic systems’ ability to tackle complex enterprise tasks like financial analysis or customer service. This could accelerate AI adoption if scaled in 6-18 months via open-source advancements.

- MCP adoption: While there are no recent stats on MCP adoption, widespread MCP use could push majority adoption in 1-2 years by minimizing friction. Several indicators, notably remote server growth, suggest that MCP could become the integration standard, with MCP-driven tools for code migration, QA, and support ticket triage leading to significant cost reductions. To date, security gaps are still holding MCP back from enterprise adoption.

- Inference Scaling: A hot topic at the moment. Even as inference costs drop, everyone is trying to figure out how to optimize systems (via hardware and software) even more to eventually deploy autonomous agents on the scale needed to make the economics work. There are limitless ways to attack this problem, but figuring it out will be another step toward acceleration.

What is clear is that early adopters in software engineering are demonstrating unit economics advantages. The question is whether capability improvement pace (making agents reliable across more domains) outstrips enterprise risk tolerance evolution (willingness to deploy autonomous AI).

In the end, based on the pattern of every previous technological discontinuity, this phase will feel like it is playing out painfully slowly. And then, just as doubt peaks, it will accelerate to a blistering pace, unleashing change at a shocking rate, much faster than skeptics would have thought possible.

This will happen just as infrastructure meets capability meets economics meets adoption, and selection pressure intensifies.