The Orchestration Discontinuity: Decoding Big Tech’s $400B AI Infrastructure Bet

The capex surge announced by Meta, Google, Amazon, and Microsoft is a premium paid to win the Agentic future. The 'Three Layers of AI Value Capture' offers a new framework for evaluating these plans.

The hyperscalers’ $400B+ AI capex surge in 2025 isn’t about generic cloud infrastructure scaling. It represents four distinct, high-stakes bets on dominating the Agentic AI Era, in which autonomous agents orchestrate complex workflows. The true Discontinuity lies not in technological advances but in the economic shift from linear, consumption-based models to exponential, network-effect platforms centered on orchestration control. This positions Big Tech for asymmetric returns, as value accrues to whoever owns the coordination rails of tomorrow’s automated economy.

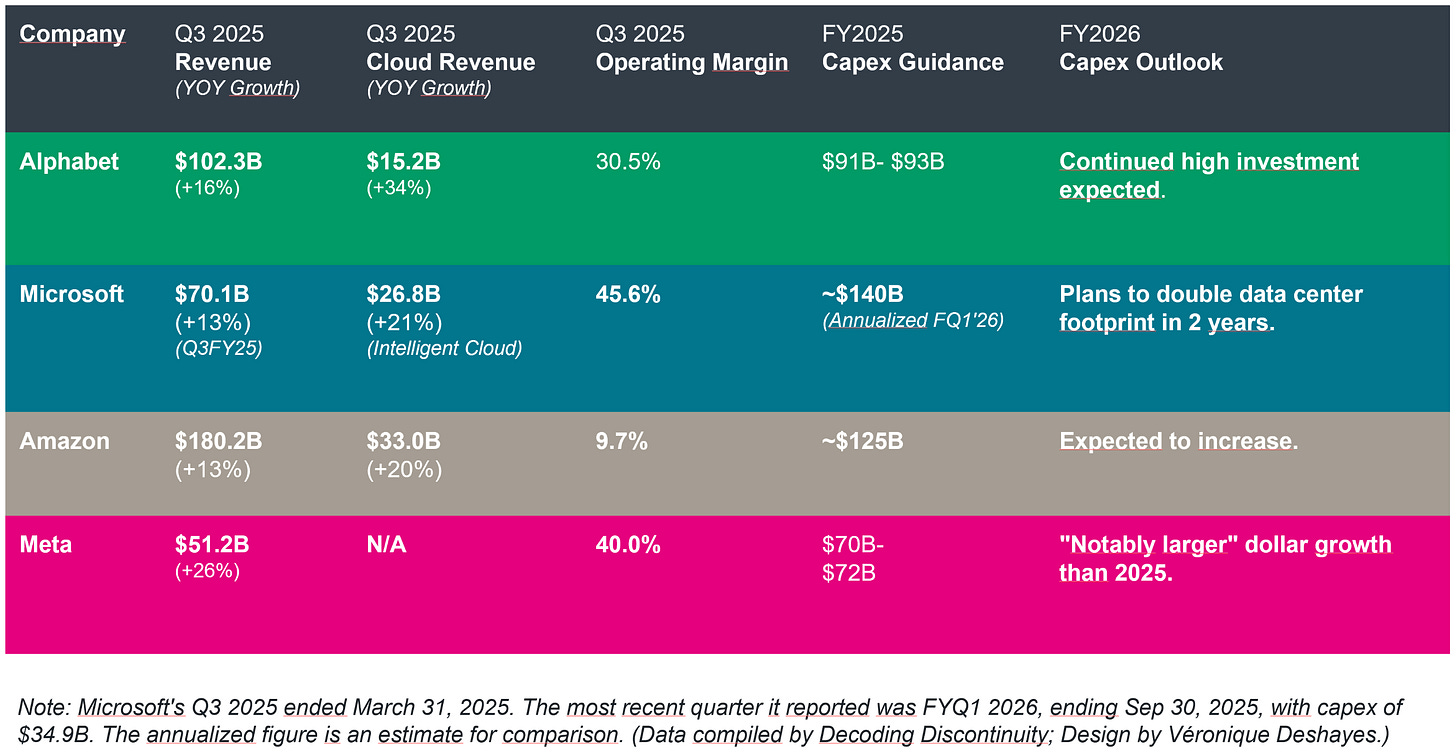

The Q3 2025 earnings season once again delivered a string of staggering capital expenditure figures from Microsoft, Google, Amazon, and Meta. The projected $400 billion of spending in 2025 is intended to serve as the financial foundation for durable growth moats over the next decade.

Consider the scale of this coordinated surge:

Google raised its FY2025 capex guidance to $91-$93 billion, up from $85 billion one quarter prior, and $75 billion at the year’s start.

Microsoft reported a record $34.9 billion in capital expenditures for its fiscal first quarter (Q1 FY2026), a 74% year-over-year increase, compared with $80 billion for all of FY2025. CEO Satya Nadella announced plans to “roughly double our total data center footprint over the next 2 years.”

Amazon guided to $125 billion in full-year 2025 capex and expects further increases in 2026. Its capex, largely directed at AI infrastructure, was $34.2 billion in the quarter, bringing year-to-date capex to $89.9 billion.

Meta increased its outlook to $70-$72 billion while stating that “capital expenditures dollar growth will be notably larger in 2026 than 2025.”

Collectively, these earnings announcements reinforced the clear narrative that the AI arms race has entered an unprecedented phase of capital deployment in the tech industry. But because the numbers are so immense and these companies are lumped together, this capex blitz also obscured an important distinction:

Each hyperscaler is deploying its capital per a very distinct theory of value capture in the Agentic AI future, leveraging unique assets and accepting very different risks.

This is why many observers find it hard to distinguish between the types of infrastructure investment each of these companies is making now. Those investments are being interpreted through the lens of the current phase, in which AI acts as a “copilot,” enhancing human productivity and generating impressive but ultimately predictable, linear growth.

These companies are looking much further ahead with these investments, beyond the steady-state infrastructure buildout, towards the bigger prize: the race for orchestration control in the agentic era. The paradigm shift will arrive as Agentic AI matures over the coming years, in a world where autonomous AI agents transition from tools that humans operate to actors that independently perform complex, multi-step business processes.

The $400 billion represents not one bet, but four. To understand who is positioned to win, we must place ourselves in this paradigm and stop viewing the AI race as a monolithic sprint.

The discontinuity is not the technology. Agents are improving. The discontinuity is in the economics.

When value capture shifts from consumption-based infrastructure (linear scaling) to orchestration platforms (network effects), the same dollar of infrastructure investment generates much higher enterprise value.
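To make the contrast between linear scaling and network effects concrete, here is a stylized sketch. It assumes, purely for illustration, that consumption revenue grows linearly with usage (units × price) while platform value follows a Metcalfe-style count of possible pairwise connections, n(n-1)/2. The function names, prices, and per-connection values are hypothetical, not figures from any of the companies discussed.

```python
def consumption_value(units: float, price_per_unit: float) -> float:
    """Linear, utility-style revenue: doubling usage doubles revenue."""
    return units * price_per_unit


def network_value(participants: int, value_per_link: float) -> float:
    """Metcalfe-style value (an assumption): proportional to the n*(n-1)/2 possible pairwise links."""
    return value_per_link * participants * (participants - 1) / 2


if __name__ == "__main__":
    # Hypothetical parameters, chosen only to show the shape of each curve.
    for n in (10, 100, 1_000):
        linear = consumption_value(n, price_per_unit=1.0)
        network = network_value(n, value_per_link=0.01)
        print(f"n={n:>5}  linear={linear:>8.1f}  network={network:>8.1f}")
```

With these illustrative numbers, growing from 100 to 1,000 participants raises linear revenue 10× but network value roughly 100×. Metcalfe's law is a contested heuristic; it stands in here for "network effects" in general, not as a valuation model.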

This is not a cloud buildout to meet short-term demand. It is an options premium paid to secure coordination control in this discontinuous Agentic AI future.

In this article, my goal is to articulate the implications of these massive infrastructure investments for each of these companies through this new lens. I will do this in two parts:

Part 1: Introduces the Three Layers of AI Value Capture. By defining and dissecting these layers, we can establish a framework for evaluating how each hyperscaler is (or is not) building a position in the layers where exponential value will accrue.

Part 2: Applies the framework to the Big 4: Google, Microsoft, Amazon, and Meta.

Part 1: The Three Layers of AI Value Capture

The AI value chain is not flat. Value and defensibility are not distributed equally. I propose to frame this as three distinct layers, each with its own economic physics.

Layer 1: The Foundational Layer (Digital Infrastructure)

This is the world of compute, storage, and networking: the picks and shovels of the AI gold rush. The layer is capital-intensive and essential, and its value mechanics are unforgiving.

The business model is linear and consumption-based, akin to a utility. Revenue grows as usage grows, but it is subject to intense price competition and margin pressure over time. Strong growth does not change this: AWS revenue grew 20.2% year-over-year and Google Cloud surged 34%, yet these impressive figures mask the reality that infrastructure is becoming table stakes.

The primary moat here is sheer scale, with custom silicon serving as a crucial defense against commoditization:

Google’s TPUs, now in their seventh generation with “Ironwood,” have reached a maturity validated by Anthropic’s recent commitment to utilize up to 1 million TPUs in a deal worth “tens of billions.”

Amazon’s dual-pronged strategy relies on Trainium for training and Inferentia for inference. According to CEO Andy Jassy, Trainium2 alone is now a “multi-billion-dollar business that grew 150% quarter over quarter.”

Microsoft’s Maia accelerator and Cobalt CPU represent their billion-dollar bet to escape the NVIDIA tax and optimize for Copilot workloads at scale.

The scale of these investments in custom silicon is staggering. Amazon’s “Project Rainier” alone contains nearly 500,000 Trainium2 chips built specifically to train Anthropic’s next-generation Claude models.

The specific requirements of an agentic cloud provide another potential source of differentiation.

Infrastructure is necessary but not sufficient. It is the foundation upon which exponential value is built, but it rarely captures that value itself. The winners at this layer will be those who can translate infrastructure dominance into lock-in at higher layers. That translation is far from guaranteed.

Layer 2: The “Intelligence” Layer (Models & Orchestration)

This is where raw compute is transformed into reasoning and action, and where exponential value is created. The intelligence layer is not monolithic: it comprises two distinct battlegrounds with radically different value capture dynamics.

Foundation Models represent the first battleground, and here a strategic schism has emerged. Proprietary models like OpenAI’s GPT series and Google’s Gemini capture value through direct API monetization, charging per token for access to frontier capabilities. However, this approach suffers from “value leakage”: while developers can build substantial applications and businesses atop these models, the providers capture only a minor share of the overall value generated.

On the proprietary side, Google’s Gemini app has already amassed over 650 million monthly active users, while Microsoft’s exclusive OpenAI partnership drives Azure AI revenue that is growing faster than Microsoft Cloud’s already impressive 26% growth rate. In contrast, Amazon Bedrock adopts a neutral, model-agnostic approach, hosting everything from Anthropic’s Claude to Meta’s Llama alongside Amazon’s own Titan and Nova models, a bet that model differentiation may prove temporary.

Meta’s Llama strategy represents the antithesis: commoditize to dominate. By open-sourcing frontier models that have now been downloaded over 1.2 billion times, Meta forces rivals to host its technology, turning their infrastructure against them. Meta captures no direct revenue but gains immense strategic leverage. Every Llama deployment trains the global developer ecosystem in Meta’s preferred frameworks, accelerating innovations that Meta can absorb into its advertising engine, where a mere 5% improvement in content recommendation drives billions in incremental revenue.

The Orchestration Mesh is the second battleground and, in my view, the ultimate prize. This is the “AI Operating System” that enables developers to choreograph fleets of specialized agents into symphonies of automated work. The company that owns the dominant orchestration layer doesn’t just sell a tool; it owns the rails upon which the automated economy runs.

We already see very different strategies emerging to pursue this opportunity:

Google’s strategic bet here is particularly interesting. Its Vertex AI Agent Builder, coupled with the open Agent2Agent (A2A) protocol introduced in April 2025, represents a vision of orchestration as a universal standard rather than a proprietary moat. The A2A protocol enables what researchers call an “Agent Mesh,” a communication model where agents maintain full transparency and shared access to communication history within a group, promoting more effective coordination and collaborative decision-making. This is economic architecture for an automated economy, not just technical architecture. Google has since gone one step further in the protocol race with the recent launch of the Agent Payments Protocol (AP2) to spur “Agentic Commerce.”

Microsoft countered with its newly unified Agent Framework, merging the enterprise-grade Semantic Kernel SDK with the experimental AutoGen framework. This convergence provides developers with a single toolkit for everything from simple API connections to complex multi-agent systems capable of autonomous task decomposition.

Amazon’s Agents for Amazon Bedrock, together with the newer Amazon Bedrock AgentCore platform, emphasize reliability and AWS ecosystem integration, banking on Amazon’s installed base rather than technical innovation.
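The “Agent Mesh” model mentioned in the Google paragraph, where every agent in a group sees one shared communication history, can be illustrated with a toy data structure. This is a minimal sketch under that assumption only: it is not the A2A protocol and not any Google API, and `AgentMesh`, `post`, `view`, and the agent names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    sender: str
    content: str


@dataclass
class AgentMesh:
    """Hypothetical group in which every member sees the full, shared message history."""
    members: set[str] = field(default_factory=set)
    history: list[Message] = field(default_factory=list)

    def join(self, agent: str) -> None:
        self.members.add(agent)

    def post(self, sender: str, content: str) -> None:
        if sender not in self.members:
            raise ValueError(f"{sender!r} is not a member of this mesh")
        self.history.append(Message(sender, content))

    def view(self, agent: str) -> list[Message]:
        # Full transparency: any member receives the complete history,
        # not only the messages addressed to it.
        if agent not in self.members:
            raise ValueError(f"{agent!r} is not a member of this mesh")
        return list(self.history)


mesh = AgentMesh()
for name in ("planner", "researcher", "payments"):
    mesh.join(name)
mesh.post("planner", "Find three suppliers under $50 per unit.")
mesh.post("researcher", "Found suppliers A, B, and C.")
print([m.sender for m in mesh.view("payments")])
```

The "payments" agent never posted, yet it sees the full exchange. A point-to-point design would instead give each agent only its own inbox, which is simpler to secure but leaves coordination context fragmented.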

From a value standpoint, platform lock-in creates switching costs measured not in dollars but in organizational transformation costs. Once an enterprise builds its mission-critical workflows on these platforms, extraction becomes virtually impossible. Every agent interaction, every workflow execution, every automated decision flows through this layer, creating both a transactional toll booth and an unparalleled data stream for continuous improvement.

Research on agentic AI frameworks reveals that current orchestration capabilities vary dramatically. Systems like AutoGen enable multi-agent conversations with shared context, while LangGraph provides state-machine orchestration with deterministic workflows. However, true interoperability remains limited: frameworks operate in silos, with incompatible abstractions for agents, tasks, and memory. The company that achieves standards-level adoption will capture disproportionate value.
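To show what “state-machine orchestration with deterministic workflows” means in practice, here is a framework-free sketch. It does not use LangGraph’s API; the node functions (`draft`, `review`, `revise`), the approval rule, and the transition table are all hypothetical.

```python
from typing import Any, Callable, Optional

State = dict[str, Any]


def draft(state: State) -> State:
    return {**state, "draft": f"Proposal for {state['task']}", "revisions": 0}


def review(state: State) -> State:
    # Hypothetical deterministic rule: approve once the draft has been revised at least once.
    return {**state, "approved": state["revisions"] >= 1}


def revise(state: State) -> State:
    return {**state, "draft": state["draft"] + " (revised)", "revisions": state["revisions"] + 1}


NODES: dict[str, Callable[[State], State]] = {"draft": draft, "review": review, "revise": revise}


def next_node(current: str, state: State) -> Optional[str]:
    """Explicit transition table: the same node and state always lead to the same next step."""
    if current == "draft":
        return "review"
    if current == "review":
        return None if state["approved"] else "revise"
    if current == "revise":
        return "review"
    raise KeyError(f"unknown node: {current}")


def run(task: str, start: str = "draft", max_steps: int = 10) -> tuple[State, list[str]]:
    state: State = {"task": task}
    node: Optional[str] = start
    trace: list[str] = []
    while node is not None:
        if len(trace) >= max_steps:
            raise RuntimeError("workflow exceeded max_steps without terminating")
        trace.append(node)
        state = NODES[node](state)
        node = next_node(node, state)
    return state, trace


final_state, trace = run("Q3 supplier audit")
print(trace)
```

Because the transitions are fixed in code, every run visits the same steps in the same order, which makes the workflow auditable. A conversational multi-agent design would instead let agents choose the next speaker from shared context, trading that predictability for flexibility.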

Layer 3: The Application & Data Flywheel Layer

The ultimate return on the hundreds of billions invested in capital expenditure will be realized at the application layer. This is where AI is leveraged to defend and enhance each company’s core, high-margin business. The application layer is not a separate, final step; it is the engine of a powerful, proprietary flywheel.

For these companies, applications are not merely distribution channels for AI capabilities. They are proprietary data factories:

Google Search processes roughly 14 billion queries daily, each one a signal of human intent, now enhanced by AI Overviews that have driven a >30% increase in user engagement with complex queries.

Microsoft 365 captures much of the knowledge work output of the Fortune 500, with Copilot usage doubling quarter-over-quarter.

Amazon.com records every browse, click, and purchase across its marketplace; the company generated $180.2 billion in revenue in Q3 2025.

Meta’s platforms document the social graph of 3.4 billion humans, with AI-driven recommendations increasing time spent on Facebook by 5% and on Threads by 10% in Q3 alone.

This data creates a flywheel: Application usage generates proprietary data, which trains better models, which enhance the application, which attracts more users, which generates more data. It’s a virtuous cycle that compounds exponentially over time. Meta’s integration of direct Meta AI interactions into ad targeting signals represents a new dimension of this flywheel as user conversations with AI become immediate purchase intent signals.
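The flywheel described above is a feedback loop, and a toy simulation shows why its output compounds rather than growing linearly. Every coefficient here (data per user, model-quality gain per unit of data, new users per unit of quality) is a hypothetical assumption, not a figure from any of the companies discussed, and `simulate_flywheel` is an illustrative name.

```python
def simulate_flywheel(users: float, periods: int,
                      data_per_user: float = 1.0,
                      quality_per_data: float = 0.01,
                      users_per_quality: float = 10.0) -> list[float]:
    """User counts per period for a toy usage -> data -> model -> users feedback loop."""
    series = [users]
    for _ in range(periods):
        data = users * data_per_user                    # usage generates proprietary data
        quality_gain = data * quality_per_data          # data trains better models
        new_users = quality_gain * users_per_quality    # a better app attracts more users
        users += new_users                              # ...who generate more data next period
        series.append(users)
    return series


def simulate_linear(users: float, periods: int, added_per_period: float) -> list[float]:
    """Baseline with no feedback loop: a fixed number of users added each period."""
    return [users + added_per_period * t for t in range(periods + 1)]


flywheel = simulate_flywheel(100.0, periods=10)
baseline = simulate_linear(100.0, periods=10, added_per_period=10.0)
print(f"after 10 periods: flywheel={flywheel[-1]:.1f}, linear={baseline[-1]:.1f}")
```

With these defaults the three links multiply to a 10% gain per period (1.0 × 0.01 × 10), so 100 users grow to about 259 after ten periods, against 200 for the linear baseline. Halving any single link halves the gain of the whole loop, which illustrates why controlling every stage of the cycle matters.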

The application layer is also where business model disruption lurks.

Google’s AI Overviews directly answer queries that once generated multiple ad clicks, yet the company maintained a 30.5% operating margin in Q3 2025 (the quarter ending September 30, 2025). Microsoft accepts near-term margin pressure from Copilot investments, betting on long-term lock-in.

The companies that win will be those that cannibalize themselves before competitors do it for them.

The Orchestration Discontinuity

The three-layer framework reveals why this capex cycle differs fundamentally from previous infrastructure buildouts. In the cloud era, infrastructure providers (AWS, Azure, GCP) captured value through consumption, scaling linearly as usage grew. The orchestration layer introduces an entirely different dynamic: winner-take-most economics driven by network effects.

This is the discontinuity in value capture economics. The $400 billion being deployed today represents not one bet, but four radically different theories of how value will be captured in the agentic era. Understanding these distinct positions, and the strategic questions that will determine the winners, reveals asymmetric investment opportunities that the market consensus has yet to price in.

In Part 2, I’ll apply this framing to each of the Big 4:

Google: The Orchestration Maximalist

Microsoft: The Integration Incumbent

Amazon: The Orchestration Risk

Meta: The Consumer Superintelligence Wildcard

The Next Eighteen Months

Three Questions That Determine Asymmetric Returns


Subscribe to Decoding Discontinuity to keep reading this post and get 7 days of free access to the full post archives.