The Inference Economy: The Missing $800 Billion Isn't Missing

Why orchestration revenue from labor market capture will generate trillion-dollar infrastructure returns.

The AI infrastructure build-out faces a perceived $800 billion revenue gap by 2030, per Bain & Company’s 2025 Technology Report. This analysis is arithmetically sound but strategically wrong. The missing revenue will emerge from a significant economic transition: AI systems capturing value directly from the $60 trillion global labor market rather than from IT budgets. As agentic colleagues perform cognitive work, enterprises will pay for tasks and outcomes rather than software licenses. This creates “orchestration revenue” priced at multiples of underlying inference costs, with those compute costs flowing directly back to infrastructure providers. Every completed workflow generates billable compute consumption that cascades through the value chain to hyperscalers and chip manufacturers. This produces gross margins of ~60-90% for orchestration platforms, while simultaneously generating the predictable, high-margin infrastructure revenue needed to justify today’s build-out.

Bain & Company’s 2025 Technology Report identified a critical challenge: the AI industry’s infrastructure investments will require $2 trillion in annual revenue by 2030 to generate acceptable returns. Yet even with aggressive growth projections, Bain forecasts only $1.2 trillion—an $800 billion shortfall that has sparked concerns about an AI investment bubble.

Bain’s market definition draws from traditional software revenue sources, missing the emergence of an entirely new economic category. The missing revenue will not come from expanding IT budgets or incremental software sales. Instead, it will emerge from AI systems capturing value directly from the $60 trillion global labor market through what I term the “inference economy.”

When synthetic colleagues replace human workers, every workflow generates inference revenue priced per task or per outcome, covering computational costs plus value markups. The transformation won’t be smooth. Pricing models are breaking, physics constrains scaling, and the transition will prove economically violent. But the gravitational pull toward this new economy is irresistible.

The Arithmetic of Transformation

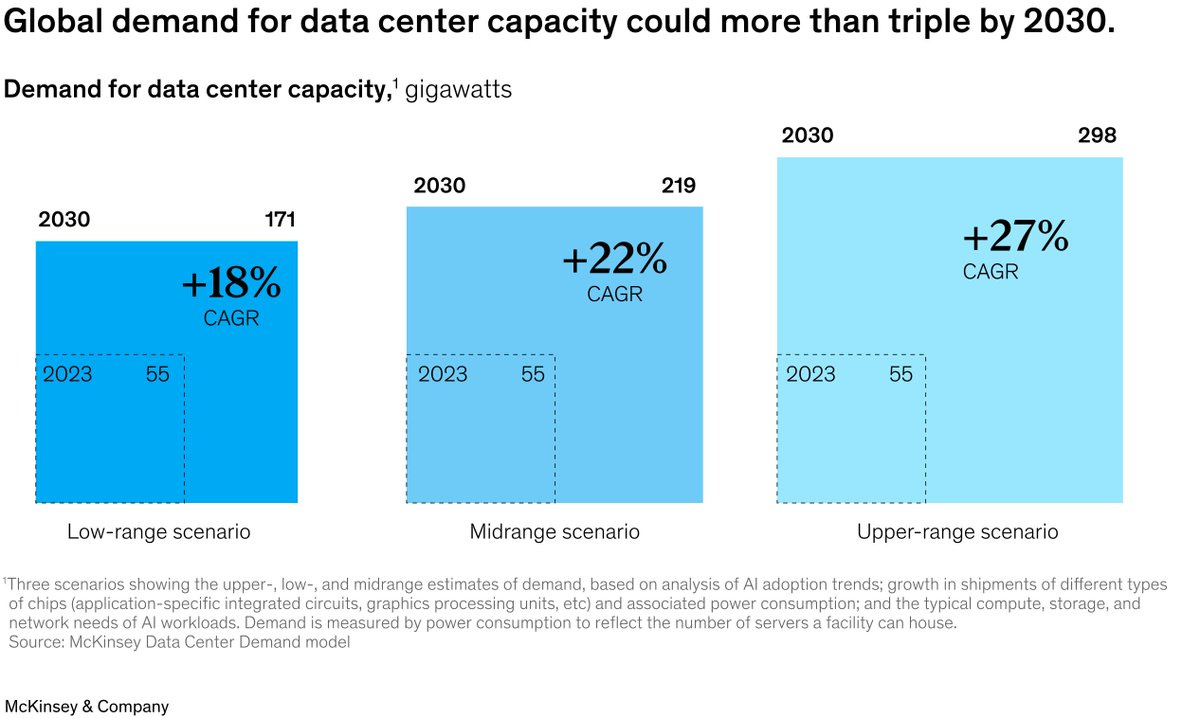

The AI industry is making an unprecedented capital allocation.

Microsoft, Google, Amazon, and Meta are collectively deploying more than $500 billion annually into AI infrastructure. This scale of investment dwarfs the combined impact of the internet boom, the mobile revolution, and cloud computing.

Bain & Company’s Technology Report 2025 crystallized the challenging economic rationale of this spending.

The revenue gap that Bain highlights has sparked legitimate concerns about an AI bubble. Critics question whether sufficient demand exists to justify such massive infrastructure investment. Yet this analysis, while arithmetically sound, fundamentally misunderstands where AI revenue will originate.

The $800 billion isn’t missing. It will emerge from an entirely new economic category that doesn’t fit traditional software models: task-based coordination performed by master agents that complete end-to-end workflows and charge for that new labor (and its outcomes).

Recent economic research from Yale economist Pascual Restrepo provides the theoretical framework for understanding this transformation. In his July 2025 NBER paper titled “We Won’t be Missed: Work and Growth in the Era of AGI”, Restrepo models an AGI era where two simple premises reshape the economy:

First, AI can eventually perform all economically valuable work at a finite compute cost.

Second, computational resources expand exponentially, making growth ‘linear in compute’ rather than human-limited.

As a result, human wages converge to the ‘compute-equivalent value’ of their labor—the cost of replication—freeing up trillions from the $60 trillion global labor market for orchestration platforms and hyperscalers.

This theoretical framework directly supports the “Agentic Colleagues” thesis I have developed in my Agentic Era series. As autonomous AI systems assume the role of synthetic colleagues within enterprises, they do not merely augment human productivity; they fundamentally restructure how economic value flows through organizations. The inference economy emerges as the natural revenue model for this transformation.

This is the emerging discontinuity that Bain’s “$800B shortfall” calculation misses.

“We’re not building data centers. We’re building the factories for the inference economy. The $2 trillion isn’t the opportunity. It’s the foundation for the agentic AI discontinuity.”

The Inference Discontinuity

Nvidia CEO Jensen Huang’s recent declaration on the latest BG2 episode that inference compute will increase by “billions” of times sounds like hyperbole. It’s not. It’s a mathematical reality emerging from how work gets orchestrated in an AI economy.

Consider what happens when AI systems coordinate workflows. Every customer interaction triggers dozens of API calls across multiple models. Every financial analysis spawns hundreds of agent interactions. Every strategic decision triggers a cascade of inference requests. A single complex workflow, such as preparing a comprehensive market analysis, might require 100 API calls, process a million tokens, and generate $50-100 in inference costs.

With value-based pricing at 5x-10x computational costs, that single analysis creates $250-$1,000 in revenue while delivering 80% savings versus human consultants.
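The pricing arithmetic here can be sketched in a few lines. This is a minimal illustration using only the figures from the text ($50-100 of inference per analysis, marked up 5x-10x); the function name `price_range` is hypothetical. At the top of the markup range (10x) the price lands at $500-$1,000, while a 5x markup on the cheapest run puts the low end at $250.

```python
def price_range(cost_low, cost_high, multiple_low, multiple_high):
    """Return the (lowest, highest) price when inference cost is marked up by a multiple."""
    return cost_low * multiple_low, cost_high * multiple_high

# Figures from the text: $50-100 of inference per analysis, priced at 5x-10x cost.
low, high = price_range(50, 100, 5, 10)
print(f"Price per analysis across the full range: ${low:,} to ${high:,}")

# Holding the markup at 10x isolates the effect of the inference cost alone.
low_10x, high_10x = price_range(50, 100, 10, 10)
print(f"Price per analysis at 10x: ${low_10x:,} to ${high_10x:,}")
```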

Economic theory now validates this scaling. Restrepo’s analysis shows that once AI can perform all “bottleneck work”—tasks essential for economic growth—output becomes “linear in compute.”

We’re witnessing what Anthropic CEO Dario Amodei aptly described in his October 2024 essay “Machines of Loving Grace” as “a country of geniuses in a data center,” an economy where compute transforms directly into productive work. “I think that most people are underestimating just how radical the upside of AI could be,” he wrote just last year.

In practice, every orchestrated workflow captures value previously locked in human bottlenecks. As these workflows scale from thousands to billions of transactions daily across the global economy, they generate exponential inference growth that validates Huang’s prediction.

This creates the inference economy.

Every business process, every decision, every piece of analysis triggers compute consumption. The hyperscalers aren’t speculating; they’re preparing for an economy that runs on inference.

Beyond Software: The Labor Market Disruption

The fundamental shift from software economics to labor market economics represents the critical insight that traditional market analyses overlook. AI systems no longer compete solely for IT budgets. They compete for the far larger allocation to human labor.

Traditional software economics operate within narrow constraints. The global software market totals approximately $700 billion, representing just 2-5% of enterprise spending. Software improves productivity by 10-30%, justifying modest per-seat pricing. The addressable market remains fundamentally limited by IT budgets.

The emerging orchestration economy obliterates these constraints. When AI agents function as synthetic colleagues, these autonomous systems complete entire workflows. They don’t enhance productivity; they replace entire functions, capturing value from wages, benefits, and operational costs that represent 40-60% of enterprise spending.

As I noted earlier this month when discussing the AI bubble, “The real feast is agentic AI’s potential to spawn entirely new revenue streams and unlock growth by cracking open the $60 trillion global labor market.”

As economic modeling demonstrates, we’re approaching a world where human wages converge toward what economists call the “compute-equivalent value” of labor: the cost of the computational resources required to replicate a worker’s output. This creates a new pricing paradigm where companies pay for outcomes based on the economic value of work performed, not tool access.

Consider the patterns emerging across industries.

Customer service systems handle interactions at $2 versus $25 human costs. Financial analysis platforms produce research at $500 per report, compared to $10,000 in analyst fees. Development systems deliver features at $2,000 versus $40,000 developer costs.
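To make the comparison concrete, the savings implied by these three examples can be computed directly. This is a small sketch using only the dollar figures quoted above; the dictionary keys and the `savings` helper are illustrative names, not part of any real pricing tool.

```python
# (AI cost, human cost) in dollars per unit of work, taken from the examples in the text.
examples = {
    "customer service interaction": (2, 25),
    "financial research report": (500, 10_000),
    "software feature": (2_000, 40_000),
}

def savings(ai_cost, human_cost):
    """Fraction of the human cost saved by using the AI alternative."""
    return 1 - ai_cost / human_cost

for task, (ai_cost, human_cost) in examples.items():
    print(f"{task}: {savings(ai_cost, human_cost):.0%} saved, "
          f"{human_cost / ai_cost:.1f}x cheaper")
```

These work out to savings of 92%, 95%, and 95%, or a 12.5x to 20x cost advantage.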

Each service represents revenue flowing not from IT budgets, but from operational expenses.

In an interview on The Information’s TITV this week, Hemant Taneja, CEO of venture firm General Catalyst, discussed the Bain study and also echoed the idea that any analysis that examined only IT budgets was inherently limited.

“The equation becomes hard if you think about the market size in the context of software budgets of companies,” he said. “But if you start to think about the fact that the ultimate revenue opportunities are labor budgets of these companies, that’s when it really starts to make sense.”

The Architecture of Value Creation

This transformation stems from a fundamental shift in the architecture of how AI systems operate. Modern agentic platforms don’t provide tools for humans to use; they orchestrate networks of specialized agents that complete work autonomously within a given environment and with access to the right tools.

A revenue operations workflow illustrates the distinction.

Traditional approaches require hours of sequential human work, from identifying leads to qualifying opportunities, preparing proposals, and securing approvals. This could consume $500 in labor costs.

An orchestrated AI system performs all tasks simultaneously. Identification agents process thousands of signals in parallel, qualification agents evaluate all leads concurrently, proposal agents generate customized documents instantly, while an orchestration layer coordinates everything in real-time.

Total cost: $10 in inference compute. Coverage: fifty times greater. Completion: near instant.

The economic disruption here extends beyond cost reduction. Revenue now scales with the work performed, not the number of seats purchased. This creates what I term “orchestration revenue,” defined as value captured from completed workflows rather than software licenses.

Though nascent, we could envision the formula to be:

Orchestration Revenue = Task Value × Inference Cost Multiple × Volume

Task value represents economic worth.

The inference cost multiple (typically 2x-20x) reflects value-based pricing above computational costs.

Volume scales with deployment breadth.

Consider the unit economics. A platform orchestrating 100,000 workflows monthly at $100 average value with 10x markup on $10 inference costs generates $10 million monthly while consuming $1 million in compute. The result is 90% gross margins with perfect scaling.
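A minimal sketch of these unit economics follows. It assumes the price per workflow is simply the inference cost times the markup (which is how the worked example arrives at $100) and that inference compute is the only cost of goods sold. The `Platform` class and its field names are hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass

@dataclass
class Platform:
    workflows_per_month: int
    inference_cost: float  # compute cost per workflow, in dollars
    multiple: float        # markup applied to the inference cost

    @property
    def price(self) -> float:
        """Price charged per workflow (assumption: cost times markup)."""
        return self.inference_cost * self.multiple

    @property
    def revenue(self) -> float:
        """Monthly revenue across all workflows."""
        return self.price * self.workflows_per_month

    @property
    def compute_spend(self) -> float:
        """Monthly inference spend, treated here as the only COGS item."""
        return self.inference_cost * self.workflows_per_month

    @property
    def gross_margin(self) -> float:
        """Share of revenue left after paying for compute."""
        return (self.revenue - self.compute_spend) / self.revenue

# Inputs from the text: 100,000 workflows, $10 inference cost, 10x markup.
p = Platform(workflows_per_month=100_000, inference_cost=10.0, multiple=10.0)
print(f"price per workflow: ${p.price:,.0f}")
print(f"monthly revenue:    ${p.revenue:,.0f}")
print(f"monthly compute:    ${p.compute_spend:,.0f}")
print(f"gross margin:       {p.gross_margin:.0%}")
```

With these inputs the sketch reproduces the figures in the text: $10 million in monthly revenue, $1 million in compute, and a 90% gross margin.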

These unit economics make inference a primary cost-of-goods-sold (COGS) factor. In traditional SaaS, COGS remain minimal, typically 10-20% of revenue, consisting of hosting and support. In the inference economy, computational costs become the dominant expense, potentially representing 10-40% of revenue depending on value capture efficiency.
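The link between the markup and the COGS share can be made explicit. Under the simplifying assumption that inference compute is the only cost of goods sold (hosting, support, and other costs are ignored here), compute as a share of revenue is just the reciprocal of the markup. The helper name `cogs_share` is hypothetical.

```python
def cogs_share(multiple):
    """Compute cost as a fraction of revenue when price = cost * multiple."""
    return 1 / multiple

# Markups spanning the 2x-20x range mentioned in the text.
for multiple in (2, 2.5, 5, 10, 20):
    share = cogs_share(multiple)
    print(f"{multiple:>4}x markup: compute = {share:.0%} of revenue, "
          f"gross margin = {1 - share:.0%}")
```

On this reading, a COGS share of 10-40% of revenue corresponds to markups between 10x and 2.5x, which is where the 60-90% gross margin range comes from.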

With compute as the dominant expense, optimizing inference costs becomes a critical competitive advantage. This explains the rapid ascent of inference-optimization platforms like Together.ai, which delivers substantially lower inference costs through architectural innovations in model serving and routing. As orchestration platforms scale, their ability to minimize inference cost per outcome becomes a key determinant of market position.

The Turbulent Transition

The path to this new economy won’t follow the smooth exponential curves that dominate technology presentations. It will be jagged, uncertain, and economically violent.

Salesforce’s experience with Agentforce reveals the complexity.

CEO Marc Benioff discovered that switching from seat-based pricing to outcome-based models isn’t just a billing change; it’s a fundamental shift in how companies operate. It’s organizational chaos. How do you price an AI agent that handles unlimited customer conversations? Per interaction? Per resolution? Per month? The market hasn’t developed standards, and early attempts are stumbling. Companies desperately want AI benefits, but their procurement departments literally lack budget codes for “synthetic employees.”

Restrepo’s research illuminates another challenge: the transition creates dramatic wage disparities.

Some workers will see wages temporarily spike above their “compute-equivalent value”, earning exceptional returns simply because their particular work happens to be among the last successfully automated. Meanwhile, other colleagues will get displaced overnight when startups crack their workflows. It’s economic musical chairs where the music stops randomly and without warning.

Constraints of the physical world add another layer of complexity.

The “atoms meet bits” problem is real. Every GPU requires rare earth minerals. Every data center demands power and cooling. Taiwan manufactures the critical chips, and geopolitics makes everyone nervous. Compute growth requires building data centers indefinitely, maintaining cheap energy, and pushing physics beyond current limits.

Meanwhile, Moore’s Law shows signs of exhaustion, even as our economic models assume its continuation.

The Four-Way Value Distribution

Despite the chaos, the economics prove so compelling that adoption is bound to accelerate. The model has the potential to create extraordinary value for four constituencies:

Enterprises achieve 70-90% cost reduction versus human alternatives while accessing previously impossible capabilities such as personalization at population scale, continuous analysis across all data, perfect consistency, and availability. Large companies will willingly pay 5-10x inference costs because they’re saving 10-20x total costs.

Orchestration platforms capture 60-90% gross margins by marking up inference costs based on value delivered. Unlike traditional software with massive development costs and slow deployment, these platforms scale instantly with usage. Every workflow completed strengthens their position through accumulated context and refined coordination patterns.

Hyperscalers and model providers receive continuous, high-margin inference revenue that scales with economic activity itself. Unlike training workloads that spike and decline, inference generates predictable, growing revenue streams. Current inference markets of $2-5 billion annually could reach $500 billion-$1 trillion by 2030. That represents pure profit for infrastructure already being built.

And, of course, there’s Nvidia. In this view, it and the other infrastructure providers sit at the true chokepoint of value creation in the AI economy.
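The inference-market projection above ($2-5 billion annually today, $500 billion-$1 trillion by 2030) implies a specific yearly growth rate, which a short calculation makes visible. This sketch assumes a five-year horizon (2025 to 2030) and constant compound growth; both are assumptions for illustration, and `annual_growth_factor` is a hypothetical helper.

```python
def annual_growth_factor(start, end, years):
    """Constant yearly multiplier that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years)

YEARS = 5  # assumption: 2025 to 2030

# Slowest case: largest starting market, smallest 2030 target.
slow = annual_growth_factor(5e9, 500e9, YEARS)
# Fastest case: smallest starting market, largest 2030 target.
fast = annual_growth_factor(2e9, 1e12, YEARS)

print(f"required growth: {slow:.2f}x to {fast:.2f}x per year")
```

Under these assumptions the market would need to grow roughly 2.5x to 3.5x every year through 2030.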

The Economic Endgame

The mathematics of the transformation to the Inference Economy are unforgiving. Economic modeling shows that as computational resources expand, labor’s share of GDP will converge to zero, while compute captures essentially all economic value.

This isn’t because human work disappears but because its value becomes capped at computational replacement costs while the economy continues expanding through compute scaling.

In other words, as computers get more powerful, a bigger share of total economic value will come from machines, not people.

It’s not that humans stop working or contributing, but that the maximum value of human work is limited by what a machine could do for the same task. Meanwhile, compute power can keep scaling up almost without limit.

The scale differential is stark: human cognitive capacity operates at approximately 10^16 to 10^18 floating point operations per second, while projected computational resources could reach 10^54 flops—a difference of more than 30 orders of magnitude!
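The size of that gap is easy to check. The snippet below takes the figures in the text at face value (they are not independently verified here) and counts the orders of magnitude between them; the variable names are illustrative.

```python
import math

# Figures quoted in the text, in floating point operations per second.
human_flops_low, human_flops_high = 1e16, 1e18
projected_flops = 1e54

# Smallest gap uses the high human estimate; largest gap uses the low one.
gap_min = math.log10(projected_flops / human_flops_high)
gap_max = math.log10(projected_flops / human_flops_low)

print(f"gap: {gap_min:.0f} to {gap_max:.0f} orders of magnitude")
```

On these numbers the differential is 36 to 38 orders of magnitude, consistent with the “more than 30” stated above.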

As Restrepo warns, we “won’t be missed” economically. But this massive transfer creates the revenue streams that justify today’s infrastructure investments, potentially accelerating growth to 20-30% annually if AGI automates science.

Economic theory confirms the transformation ahead. As more essential work becomes automatable, growth becomes entirely driven by computational expansion, with output scaling linearly with compute resources. Every additional data center, every new GPU cluster, every inference optimization directly translates into economic capacity. The economy stops being constrained by human limitations and starts scaling with silicon.

The build-out becomes essential for the economic transformation ahead, one where compute becomes the primary factor of production.

For enterprises, then, the question isn’t whether to deploy AI, but how quickly to restructure around synthetic colleagues. The competitive advantage isn’t incremental but exponential. Even amid backlash, such as the questions raised by this summer’s MIT study on the high failure rate of enterprise GenAI pilot projects, the reality is that delay means competing against organizations with unlimited cognitive resources.

This is the discontinuity that must be understood to grasp the scale of upheaval coming to all economic assumptions.

The missing $800 billion for all of this infrastructure spending isn’t missing. This revenue (and far, far, far more!) will emerge from the orchestration capability enabled by this inference economy.

Between now and 2030, as synthetic colleagues perform increasing portions of cognitive labor, they will transform how value gets created in the economy.