Two Tales of Compute: Decoding AI Infrastructure’s New Economics (Part 1)

AI's compute demands are driving a precarious $7TN infrastructure buildout. In Part 1, I examine the context and the soaring capital expenditures for training. Tomorrow: Operating costs for inference.

Oracle's stock surged 36% after disclosing a staggering $455B backlog that included an OpenAI agreement to buy $300B in computing power. The stock’s largest one-day jump in more than 30 years added $244 billion in market cap. This might feel like market exuberance, but in fact it reflects a recognition that AI has broken Moore's Law. Where compute once got cheaper every two years, AI now demands exponentially more compute for linear improvements: GPT-5's training alone cost an estimated $500M, and industry experts project a 10x increase in training compute for the next state-of-the-art (SOTA) models. The result is a bifurcated market where training compute is oligopolistic, while inference compute becomes the recurring cost battleground. The entire $7 trillion infrastructure buildout rests on one fragile assumption: that OpenAI and a handful of labs keep getting funded. If that funding stops, Oracle's backlog evaporates, and the AI bubble bursts.

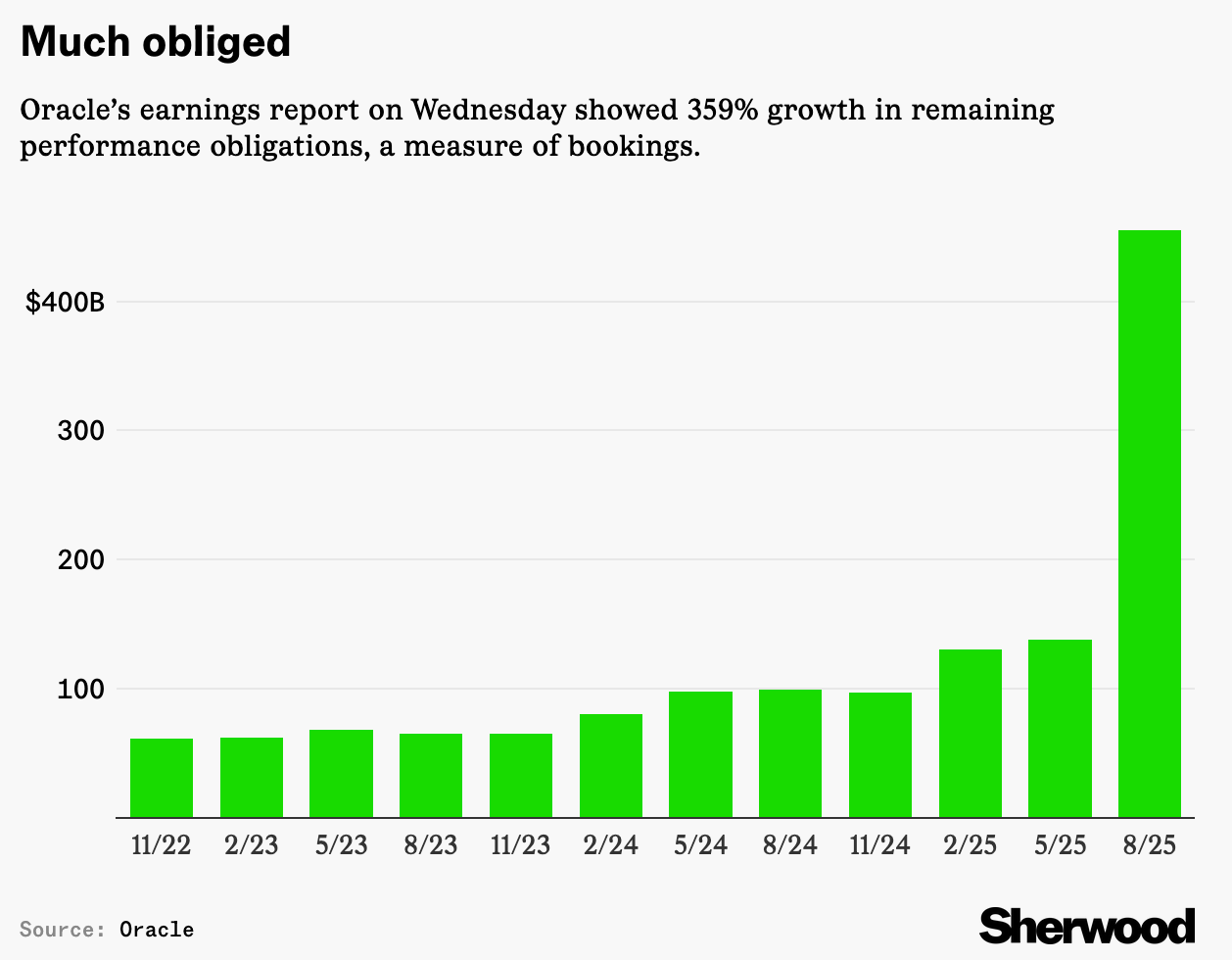

On September 10, 2025, Oracle Corporation became $244 billion more valuable in a single trading session.

The catalyst wasn't a breakthrough product or strategic acquisition. It was a number so large that seasoned analysts struggled to contextualize it: a $455 billion backlog for compute demand, up 359% year-over-year! The stock's 36% surge, its best day since 1992, came after OpenAI agreed to buy $300 billion in computing power from the company, a deal that validates what insiders have known for months: compute infrastructure has become AI's existential bottleneck.

As I wrote this summer, compute scarcity is reshaping the future of AI. Almost two months after my analysis of compute constraints as a potential single point of failure, Oracle's historic surge and Qwen3-Next's efficiency breakthrough demand a deeper examination of AI's extraordinary infrastructure investments.

The momentum shows no signs of slowing.

Last week, Bloomberg reported that Oracle is in talks with Meta for a potential $20 billion AI cloud computing deal, adding to existing multi-billion-dollar commitments from OpenAI, xAI, and Google. The numbers are so massive that even the CEOs appear to have trouble keeping track. Meta CEO Mark Zuckerberg, fielding an unexpected question at a recent dinner with President Trump, said he planned to spend “at least $600 billion through 2028 in the US,” though he later admitted he had been caught by surprise, suggesting he had simply picked a number on the spot. Meta would have to dramatically ramp up its AI spending to hit $600 billion in the next three years!

No matter. The message is the same. In the age of AI, access to compute infrastructure is existential.

Hyperscalers and AI labs are racing ahead while creating interdependencies that carry systemic risk. What truly defines today's compute landscape is the intricate web of dependencies between AI labs, NVIDIA, and cloud providers. Yesterday’s announcements underscore this fragility. NVIDIA's planned investment of up to $100B in OpenAI ties chip supply directly to lab funding, while its $6.3B guarantee for CoreWeave's unused capacity ensures overflow compute but highlights overbuild risks if demand softens.

OpenAI cannot function without Microsoft's Azure infrastructure and NVIDIA's H100 clusters. Anthropic depends on AWS's custom Trainium chips and compute capacity. Even supposedly independent players like xAI must partner with Oracle and CoreWeave while spending billions building their own infrastructure.

This interdependence creates a precarious equilibrium: if any part of this web of partnerships buckles, the resulting economic collapse could be massive.

And yet, none of them can afford to be cautious. Waiting means falling irrecoverably behind.

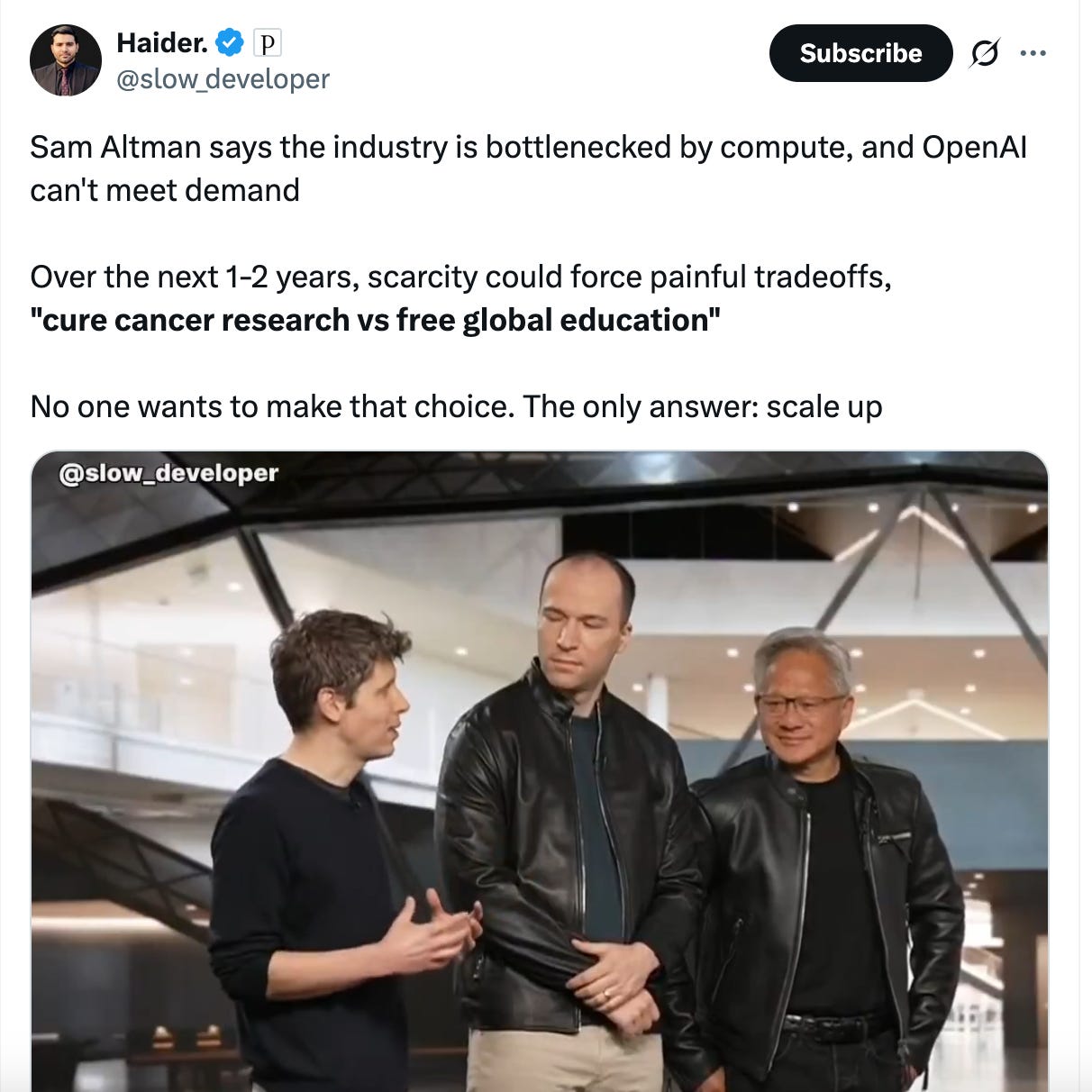

This is the discontinuity we must decode: Compute has become the bottleneck and the arbiter of who wins in AI. Not algorithms, not data, not even talent—though all matter—but raw, specialized, massively scaled computational power. The implications ripple through every layer of the stack, from the oligopolistic dynamics of training clusters to the knife-edge economics of inference at scale.

The End of the Moore’s Law Era: The AI Revolution's Physics and Economics

The AI revolution operates under different physics and economics from traditional, Moore's Law-driven progress.

For nearly six decades, Gordon Moore's observation governed the technology industry's cadence. Moore's Law, first articulated in 1965 when Moore was director of research at Fairchild Semiconductor, originally predicted that the number of transistors on a chip would double every year; in 1975, Moore revised the pace to roughly every two years, with the cost per transistor falling as density rose. This became the industry's metronome, the assumption underlying every business plan, every venture investment, every strategic roadmap. Compute would get exponentially cheaper and more powerful as reliably as the seasons change.

Every major technology wave of the past half-century rode on Moore's Law's reliable cadence: costs would fall, capabilities would rise, and innovation would naturally follow. That cadence has slowed in recent years, with transistor density now improving perhaps 20-25% annually. While this was a far cry from Moore's predicted doubling, the overall capacity/price ratio continued to move just enough in the right direction to improve the underlying economics.
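To put those rates side by side, here is a small conversion between a doubling period and the equivalent annual growth rate. It uses only the figures above (a two-year doubling versus 20-25% a year); the function names are illustrative, not from any library.

```python
import math

def annual_growth_from_doubling(years_to_double: float) -> float:
    """Annual growth rate implied by doubling every `years_to_double` years."""
    return 2 ** (1 / years_to_double) - 1

def doubling_time_from_growth(annual_rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)

# A two-year doubling works out to roughly 41% growth per year.
moore_rate = annual_growth_from_doubling(2.0)

# At 20-25% per year, density doubles only every ~3.1-3.8 years.
slow_doubling = [doubling_time_from_growth(r) for r in (0.20, 0.25)]

print(f"Two-year doubling = {moore_rate:.1%} per year")
for r, t in zip((0.20, 0.25), slow_doubling):
    print(f"{r:.0%} per year -> doubles every {t:.1f} years")
```

Put differently, 20-25% a year stretches the doubling period to roughly three to four years.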

Today's AI revolution operates under fundamentally different physics and economics.

First, there is no guarantee that increased demand for compute can be met with increased supply. AI compute demand has exploded by orders of magnitude, but the infrastructure needed to meet it depends on complex construction projects with multi-year timelines.

Next, the transformer paradigm, with its empirically validated scaling laws, has revealed that model performance scales predictably with compute, but at escalating costs, turning AI progress into a capital-intensive race rather than a predictable, cost-reducing evolution.

Training costs are now at levels that would have seemed fantastical just five years ago:

- GPT-5's training alone is estimated to have consumed over $500 million in compute resources.
- Frontier models from Anthropic and Google approach similar magnitudes.
- DeepMind's Gemini Ultra reportedly required $191 million for a single training run, with multiple runs needed for hyperparameter optimization.

This goes deeper than raw costs or dependencies.

In the Moore's Law era, waiting two years meant getting the same capability at half the price. In the transformer era, waiting means falling irrecoverably behind.

OpenAI's GPT-4 required an estimated 10,000 times more compute than GPT-2, released about four years earlier. The next SOTA model is expected to require 10x more compute.

This isn't exponential improvement at declining costs.

It's exponential costs for linear improvements in capability.
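One way to see why exponential cost buys roughly linear progress: scaling-law studies fit loss as a power law in training compute, L(C) = a · C^(-α). The sketch below assumes a = 1.0 and α = 0.05 purely for illustration (real fitted constants vary by study and setup). Under any such power law, every 10x increase in compute shrinks loss by the same fixed factor, so each equal step of improvement costs ten times more than the last.

```python
# Hypothetical constants, chosen only to illustrate the shape of the curve.
A = 1.0       # scale constant (assumed)
ALPHA = 0.05  # power-law exponent (assumed, illustrative)

def loss(compute: float) -> float:
    """Power-law loss as a function of training compute (arbitrary units)."""
    return A * compute ** (-ALPHA)

budgets = [10.0 ** k for k in range(1, 6)]  # 10x steps: 1e1 ... 1e5
losses = [loss(c) for c in budgets]

for prev, cur, c in zip(losses, losses[1:], budgets[1:]):
    # Each 10x jump in compute yields the same relative improvement.
    print(f"compute {c:>9.0f}: loss {cur:.4f} ({1 - cur / prev:.1%} lower)")
```

Treat this only as a picture of the curve's shape; what counts as "capability" in practice is messier than a single loss number.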

The Chinchilla scaling laws, validated across dozens of models, show that optimal performance requires balanced scaling of both parameters and training data, which means compute requirements grow faster than model size alone would suggest.
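The Chinchilla point can be made concrete with two widely used rules of thumb: training compute C ≈ 6·N·D FLOPs for N parameters and D training tokens, and a compute-optimal ratio of roughly 20 tokens per parameter. Both are approximations, and the helper names below are illustrative. Because D grows with N, doubling the model roughly quadruples the compute.

```python
TOKENS_PER_PARAM = 20  # approximate Chinchilla-optimal ratio (rule of thumb)

def training_flops(params: float, tokens: float) -> float:
    """Standard approximation: ~6 FLOPs per parameter per training token."""
    return 6 * params * tokens

def chinchilla_optimal_flops(params: float) -> float:
    """Compute for a model trained on ~20 tokens per parameter."""
    return training_flops(params, TOKENS_PER_PARAM * params)

small = chinchilla_optimal_flops(70e9)   # 70B params on 1.4T tokens
large = chinchilla_optimal_flops(140e9)  # 2x the parameters, 2x the tokens

print(f"70B model:  {small:.2e} FLOPs")
print(f"140B model: {large:.2e} FLOPs ({large / small:.0f}x the compute)")
```

The 70B-parameter, 1.4T-token configuration matches Chinchilla's own setup; the 140B case is a hypothetical doubling.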

To understand this discontinuity, we must examine two distinct but interrelated compute realities: training and inference.

Part 1: Training Compute – The Frontier Bottleneck

Training compute represents the foundational layer of AI's new economics: massive, synchronized GPU clusters operating in perfect orchestration to birth intelligence from data.

Consider the scale of recent training runs: analysts and researchers have estimated that training GPT-5 required approximately 25,000 NVIDIA H100 GPUs operating continuously for three to six months, consuming enough electricity to power a small city of 50,000 residents. Running the model is energy-hungry too: researchers at the University of Rhode Island’s AI lab calculated that GPT-5’s average daily energy consumption in operation may equal that of as many as 1.5 million U.S. homes.
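The training estimate above translates into GPU-hours and power draw with simple arithmetic. This sketch takes the 25,000-GPU, three-to-six-month figure at face value, uses the H100 SXM's 700 W maximum board power, and assumes a hypothetical rental price of $2-4 per GPU-hour. It ignores CPUs, networking, cooling, storage, and failed or exploratory runs, so it is a GPU-only view rather than a total-cost estimate.

```python
GPUS = 25_000
H100_BOARD_POWER_KW = 0.7        # H100 SXM maximum board power (700 W)
PRICE_PER_GPU_HOUR = (2.0, 4.0)  # hypothetical rental prices in USD (assumed)

def gpu_hours(months: int, hours_per_month: int = 730) -> int:
    """GPU-hours for the whole cluster running continuously (730 h/month avg)."""
    return GPUS * months * hours_per_month

# GPU-only power draw in megawatts, before cooling and other overhead.
gpu_power_mw = GPUS * H100_BOARD_POWER_KW / 1000

for months in (3, 6):
    hours = gpu_hours(months)
    low, high = (hours * p for p in PRICE_PER_GPU_HOUR)
    print(f"{months} months: {hours / 1e6:.1f}M GPU-hours, "
          f"${low / 1e6:.0f}M-${high / 1e6:.0f}M at assumed prices")
print(f"GPU power draw alone: {gpu_power_mw:.1f} MW")
```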

The computational intensity stems partly from the transformer architecture's requirement to process every token in relation to every other token: attention costs grow quadratically with context length, on top of compute that already scales with model size.
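A rough FLOP count shows where the quadratic term comes from. In standard self-attention, computing the score matrix QKᵀ and then multiplying the scores by V each take about 2·n²·d FLOPs per layer for n tokens and model width d, so doubling the context quadruples that part of the cost. The sketch counts only those two matrix multiplications and ignores projections, MLP layers, and kernel-level optimizations; the width is an assumed example value.

```python
def attention_score_flops(seq_len: int, d_model: int) -> int:
    """FLOPs for QK^T plus scores @ V in one layer, for one sequence.

    Each is an n-by-n-by-d matrix product costing ~2*n*n*d FLOPs
    (one multiply and one add per term). Projections and MLPs excluded.
    """
    qk = 2 * seq_len * seq_len * d_model
    av = 2 * seq_len * seq_len * d_model
    return qk + av

D_MODEL = 8192  # assumed model width, for illustration only

for n in (8_192, 16_384, 32_768):
    print(f"context {n:>6}: {attention_score_flops(n, D_MODEL):.2e} FLOPs/layer")
```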

The physical infrastructure required staggers comprehension.

A single training run for a frontier model requires data centers with 100+ MW of power capacity, specialized cooling systems capable of dissipating 40 kilowatts per rack, and networking fabrics that give each GPU server on the order of 3.2 Tbps of bandwidth so that communication does not become the bottleneck. Leading-edge racks already draw 130-250 kW, with designs of up to 900 kW projected. The synchronization requirements are absolute: a single GPU failure can halt an entire synchronous training run and force a restart from the last checkpoint, potentially wasting weeks of computation worth millions of dollars.

This explains why OpenAI reportedly maintains hot spare clusters, burning millions monthly in idle capacity to ensure training continuity.
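Why failures loom so large at this scale can be sketched with two textbook approximations. If GPU failures are independent, a synchronous job's mean time between failures (MTBF) shrinks roughly in proportion to cluster size, and Young's approximation puts the best checkpoint interval at about √(2·δ·M), where δ is the time to write a checkpoint and M is the cluster MTBF. Spare capacity helps because a job can swap out a failed node and resume from its last checkpoint instead of waiting for repairs. The per-GPU MTBF and checkpoint time below are hypothetical placeholders, not measured figures, and restart time is ignored.

```python
import math

GPUS = 25_000
GPU_MTBF_HOURS = 50_000.0     # hypothetical per-GPU mean time between failures
CHECKPOINT_WRITE_HOURS = 0.1  # hypothetical time to save one checkpoint (6 min)

def cluster_mtbf(gpu_mtbf: float, n_gpus: int) -> float:
    """MTBF of a synchronous job that stops when any one GPU fails.

    Assumes independent, exponentially distributed failures, so the
    failure rates of individual GPUs add up.
    """
    return gpu_mtbf / n_gpus

def young_checkpoint_interval(write_time: float, mtbf: float) -> float:
    """Young's first-order approximation of the optimal checkpoint interval."""
    return math.sqrt(2 * write_time * mtbf)

def wasted_fraction(interval: float, write_time: float, mtbf: float) -> float:
    """Approximate share of time lost to checkpoint writes plus redone work."""
    return write_time / interval + interval / (2 * mtbf)

mtbf = cluster_mtbf(GPU_MTBF_HOURS, GPUS)
interval = young_checkpoint_interval(CHECKPOINT_WRITE_HOURS, mtbf)
overhead = wasted_fraction(interval, CHECKPOINT_WRITE_HOURS, mtbf)
print(f"Cluster MTBF: {mtbf:.1f} h; checkpoint every {interval * 60:.0f} min")
print(f"Approximate overhead: {overhead:.0%}")
```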

The financial implications are huge.

Training compute has become by far the largest line item on any frontier AI company's profit and loss statement, akin to Sales & Marketing for high-growth SaaS. The concept of compute "payback" (how quickly a model's revenue can recoup its training costs) is, in my view, perhaps the most critical metric for AI economics when assessing the “durable growth moat” of an LLM.

Compute payback is calculated as LTM R&D costs divided by LTM gross profit. Payback periods for leading LLMs are compressing along an inverse exponential curve, from roughly 12-15x today to about 1x by 2030, showing accelerating returns on compute investments.

This reality has crystallized into an oligopolistic market structure that would make Standard Oil blush.
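The compute payback ratio defined above is simple to compute. This sketch implements it and, assuming a smooth exponential decline over five years to 2030, derives the constant annual rate at which the ratio would need to shrink to go from 12-15x to about 1x. The company figures in the example are hypothetical.

```python
def compute_payback(ltm_rnd: float, ltm_gross_profit: float) -> float:
    """Compute payback ratio: LTM R&D costs divided by LTM gross profit."""
    if ltm_gross_profit <= 0:
        raise ValueError("payback is undefined without positive gross profit")
    return ltm_rnd / ltm_gross_profit

def annual_decay(start_ratio: float, end_ratio: float, years: float) -> float:
    """Constant yearly multiplier that takes start_ratio to end_ratio."""
    return (end_ratio / start_ratio) ** (1 / years)

# Hypothetical lab: $6.5B LTM R&D against $0.5B LTM gross profit.
print(f"Payback today: {compute_payback(6.5e9, 0.5e9):.1f}x")

for start in (12.0, 15.0):
    factor = annual_decay(start, 1.0, years=5)
    print(f"{start:.0f}x -> 1x in 5 years: ratio shrinks {1 - factor:.0%} per year")
```

Under that assumption, the ratio would have to fall by roughly 40% every year.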

Microsoft's recently disclosed commitment of $100 billion to AI infrastructure through 2027 includes a single data center in Wisconsin that Microsoft bills as the “world’s most powerful AI datacenter.” It is one of two data centers Microsoft is building in the state that will require a projected total of 3.9 gigawatts of power, which would be enough for 4.3 million homes.

In a state with 2.82 million homes, that is extraordinary. And it illustrates the capital moats emerging.
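The Wisconsin comparison can be checked from the three figures quoted above: 3.9 GW spread across 4.3 million homes implies an average draw of roughly 0.9 kW per home, and 4.3 million is about 1.5 times the state's 2.82 million homes. The sketch only rearranges those numbers; it assumes the power figure is a continuous average draw.

```python
DATACENTER_POWER_W = 3.9e9  # projected total for both Wisconsin sites
HOMES_EQUIVALENT = 4.3e6    # homes that power could supply, per the estimate
WISCONSIN_HOMES = 2.82e6

implied_watts_per_home = DATACENTER_POWER_W / HOMES_EQUIVALENT
share_of_state = HOMES_EQUIVALENT / WISCONSIN_HOMES
# Treat the draw as continuous over a year (8,760 h) to get annual energy.
implied_kwh_per_year = implied_watts_per_home * 8760 / 1000

print(f"Implied average draw: {implied_watts_per_home:.0f} W per home")
print(f"About {implied_kwh_per_year:,.0f} kWh per home per year")
print(f"Equivalent to {share_of_state:.2f}x Wisconsin's housing stock")
```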

Anthropic's arrangement with AWS provides not just compute, but co-engineering of custom Trainium chips that reduce training costs by 40% compared to GPUs. Google's TPU advantage allows it to train models at dramatically lower costs than GPU-based alternatives, while xAI's decision to build its own 100,000-GPU cluster in Memphis, dubbed "Colossus," represents a $10 billion bet on vertical integration; Elon Musk has called it "the most powerful training cluster in the world."

The role of neoclouds like CoreWeave, or Crusoe as it rolls out its digital infrastructure business, deserves special attention here. Crusoe has expertly exploited the current scarcity, offering GPU access at premium prices to customers locked out of hyperscaler partnerships or whom hyperscalers simply could not serve for technical or capacity reasons.

Yet the window could be closing, at least on training, as LLM providers race to internalize training capacity. Microsoft's announcement of a 1.4-gigawatt data center in Wisconsin, Google's multi-gigawatt commitments to carbon-free energy by 2030, and Amazon's nuclear power deals with Dominion Energy all point to the same conclusion: training compute is too strategic to outsource.

The agentic revolution adds another layer of complexity to training dynamics.

As we explored in our Memento thesis, the potential shift toward memory-augmented architectures fundamentally alters post-training requirements. There is some speculation that post-training techniques—reinforcement learning from human feedback, constitutional AI, iterative refinement—may now consume as much compute as pre-training. GPT-4's post-training reportedly required six additional months after base model completion, doubling the total compute budget.

Models must be trained not just to predict the next token but to reason through multi-step plans, maintain coherent world models, and adapt to novel situations. This expansion of the training envelope from months to potentially years of continuous learning represents a step-function increase in compute requirements that current infrastructure can barely support.

The recent advances in test-time compute optimization, where models like o1 use additional inference-time computation to improve responses, blur the line between training and inference, creating new compute bottlenecks we're only beginning to understand. This could increase effective compute needs by 2-5x for reasoning-heavy tasks.

Coming Wednesday

Tomorrow I’ll publish the second part of “Two Tales of Compute.” This edition will dive into the economics of inference spending as well as the moves the major players are making to disentangle themselves from their risky co-dependencies.

Here’s a preview:

Part 2: Inference Compute – The Economics of Usage

If training compute is the “capex” of AI, inference compute is its operational lifeblood.

Every query processed, every agentic action executed, every token generated represents an ongoing “opex” that compounds relentlessly. The economics here operate under different physics than training: while training happens once, inference happens billions of times daily, transforming the cost structure from a one-time capital investment to a perpetual operational burden that defines unit economics.
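The one-time versus perpetual contrast can be made concrete with standard FLOP approximations: training costs about 6·N·D FLOPs once, while generating each token costs about 2·N FLOPs (a forward pass only), every time. Under those approximations, cumulative inference compute overtakes the training run once the model has served about 3·D tokens. The model size, training tokens, and daily traffic below are hypothetical, and attention and caching overheads are ignored.

```python
def training_flops(params: float, train_tokens: float) -> float:
    """~6 FLOPs per parameter per training token (forward + backward)."""
    return 6 * params * train_tokens

def inference_flops(params: float, served_tokens: float) -> float:
    """~2 FLOPs per parameter per generated token (forward pass only)."""
    return 2 * params * served_tokens

PARAMS = 100e9         # hypothetical 100B-parameter model
TRAIN_TOKENS = 2e12    # hypothetical 2T training tokens
TOKENS_PER_DAY = 50e9  # hypothetical daily serving volume

train = training_flops(PARAMS, TRAIN_TOKENS)
daily = inference_flops(PARAMS, TOKENS_PER_DAY)
breakeven_tokens = 3 * TRAIN_TOKENS  # where 2*N*T equals 6*N*D

print(f"Training: {train:.1e} FLOPs (one-time)")
print(f"Inference: {daily:.1e} FLOPs per day")
print(f"Break-even after ~{breakeven_tokens:.0e} served tokens "
      f"(~{train / daily:.0f} days at this traffic)")
```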

The mathematics of inference reveal why efficiency has become existential. (…)
