Reading the Tea Leaves: What AI Capital Intensity Actually Reveals

As AI capex soars, the real signal isn't how much firms spend but what they buy. I map out 3 scenarios for AI compute by 2027: inference dominance, training bifurcation, or a post-transformer reset.

Amid the “Bubble” and “Depreciation” debates, current financial disclosures fall short. Companies lump “AI Capex” together as if all infrastructure is equivalent - it’s not. Training hardware faces rapid 18-month obsolescence, while inference setups can deliver returns for 4-5 years. Without better transparency on workload mix, customer concentration, custom silicon, and utilization, investors are navigating $400B+ decisions with incomplete data. Those who adapt will thrive; missteps could lead to major write-downs or worse. By 2027, the divide will be clear.

Discussing the relentless compute demands driving AI’s frontier models, Anthropic CEO Dario Amodei said last week at the New York Times’ 2025 DealBook Summit that established scaling laws are here to stay.

“You put more data into AI with small modifications...And the thing that is most striking about all of it is, as you train these models in this very simple way, with a few simple modifications, they get better and better at every task under the sun. They get better at coding, they get better at doing science. They get better at biomedicine, they get better at the law, they get better at finance...That trend is going to slow down for sure, but it’s still going to be really fast. And so, I have this confidence that eventually, the economic value is going to be there.”

Even so, he acknowledged the high-wire financial planning involved when projecting the technological demands against the long-term Capex needed to build compute. Spend too little, and a big company like Anthropic or OpenAI risks not being able to serve customers in 2 or 3 years. Overspend, and the companies face revenue shortfalls that in extreme cases could threaten bankruptcy or tempt them to adopt a “YOLO” mindset and “turn that dial pretty far” in terms of rolling out products to drive usage despite safety risks.

“When the timing of the economic value is uncertain, there’s an inherent risk of under-reacting or over-extension,” he said. “And because the companies are competing with each other, there’s a lot of pressure to push things.”

Amodei spoke just days after the latest reports that Anthropic had taken steps to prepare for a 2026 IPO that could value the company at $350 billion, and just three months after it raised a funding round at a $183 billion valuation.

This is what $400 billion in infrastructure investment looks like in 2025: a high-stakes game of Russian roulette where all the chambers are loaded, but some contain blanks and some contain IPOs.

I’m not going to rehash the various arguments for and against the AI bubble; I’ve shared my own analysis on how to think about that elsewhere. I have also touched on the hyperscalers’ Capex investments, the implications of Google’s Gemini 3 breakthrough, and what the full-stack provider means for competition with both large model builders such as OpenAI and the leading GPU provider, Nvidia.

In this case, I want to drill down specifically into the balance sheet questions surrounding this extraordinary infrastructure spending, and the technical questions about its durability, because it’s clear that anxiety around both continues to spook the markets.

As I noted last week, fund managers now believe, for the first time since the 2008 financial crisis, that tech companies are over-investing. Those worries were amplified by a dose of financial celebrity intervention: Michael Burry entered the scene, arguing that the big companies buying all those Nvidia chips should be depreciating them on much faster timelines.

These concerns are not exactly new. In March 2025, I highlighted in my teardown of CoreWeave’s S-1 its questionable depreciation schedules (6-year GPU useful life versus 18-month technological cycles), and $8 billion in debt collateralized by rapidly depreciating training infrastructure as a potential red flag for its IPO. The core issue: “The mismatch between asset lifecycles and debt terms represents an under-appreciated risk.”

Still, if Burry is right, these companies should be reporting much higher annual expenses. More practically, it implies that the infrastructure being put into the ground does not have a long shelf life. What might otherwise sound like an esoteric accounting dispute drew unusually large headlines because Nvidia felt compelled to respond with an extensive memo to sell-side analysts refuting his analysis. The memo came amid the Google Gemini 3 release, which was already putting pressure on Nvidia’s stock.

Putting aside the public relations drama, that still leaves investors facing a fundamental puzzle they must solve: Are these companies building durable infrastructure that generates returns for 5+ years, or accumulating stranded assets that will require write-downs when workloads shift, customers internalize capacity, or technological paradigms change?

The answer is paramount for the companies and their investors, of course. But it also matters more broadly. The resources being poured into this building frenzy are already stretching supply chains and source materials thin, not to mention power grids and natural resources. It’s not clear that the core infrastructure could be replaced at scale every two to three years, either physically or financially. Valuation bubble or no, investments and expansion could hit a wall if this infrastructure has a short life cycle.

Before it comes to that, we need to know whether this AI infrastructure investment is rational. To do that, the key distinction we need to make is understanding what the money is buying. For example, training-heavy GPU clusters depreciate fast and serve a tiny, concentrated customer base, while inference-focused, custom-silicon infrastructure is more flexible, lasts longer, and serves a broadening market. So, the same capex ratio can signal strength or risk depending on the mix.

The problem is that the headline Capex spending numbers don’t tell investors whether this is smart long-lived infrastructure or a setup for future write-downs. The aggregate metrics that most companies disclose reveal almost nothing about underlying long-term fitness. Some infrastructure investments align with long-term workload evolution and customer needs. Others represent speculative bets on transient market conditions that may not persist beyond initial contract terms.

Understanding the Capex Explosion

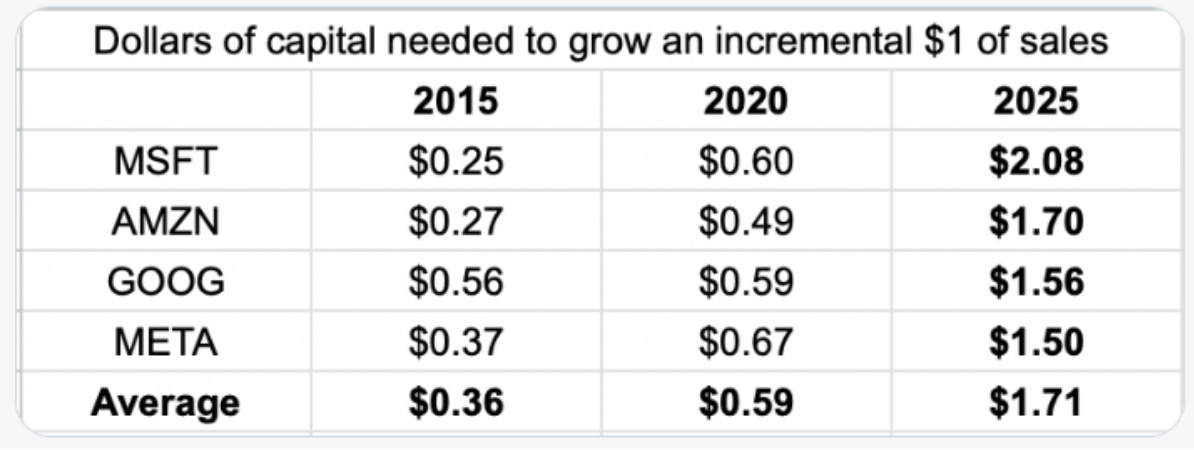

The five-fold increase in capital intensity from 2015 to 2025 reflects a fundamental shift in what these companies are building.

Figure 1 - $ of capital needed to produce $1 of sales (source: https://x.com/JohnHuber72)

Cloud computing infrastructure from 2015 supported relatively predictable workloads, such as web applications, databases, storage, and compute instances, with well-understood utilization patterns and multi-year lifecycles. AI infrastructure serves workloads with radically different characteristics.

The training burden drove the initial spike. From 2023 to 2024, the dominant narrative was frontier model training. It remains essentially the case today. Each generation of models requires exponentially more compute than predecessors. Training runs that cost $10 million in 2022 cost $100 million by 2024. The race for model capabilities continues to drive purchases of hundreds of thousands of H100 GPUs at peak prices.

Training infrastructure has distinct economic characteristics that explain capital intensity:

Short technological cycles. Training GPUs become obsolete within 18-24 months as newer generations are deployed. The capital deployed generates returns across 18-month windows before requiring refresh, rather than the traditional 5-year cloud infrastructure lifecycles.

Dense clustering requirements. Training frontier models requires homogeneous clusters of cutting-edge GPUs with high-bandwidth interconnects. You can’t mix GPU generations or repurpose last-generation training infrastructure for new workloads without massive retrofitting.

Latency insensitivity. Training jobs can be batched and scheduled for maximum throughput.

Customer concentration. The number of organizations training frontier models globally is perhaps 15-20. When you build dedicated infrastructure for specific AI labs, your capex-to-revenue ratio reflects low utilization during buildout and concentration risk as those customers potentially internalize capacity.

This creates tension between accounting conventions and economic reality. When companies extend useful life assumptions from 4-5 years to 6 years for infrastructure that becomes technologically obsolete in 18 months, reported profits overstate economic earnings.
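To make the mismatch concrete, here is a minimal sketch of the arithmetic, assuming simple straight-line depreciation with zero salvage value. The $1 billion purchase price is a hypothetical round number, not a figure from any filing; the 6-year and 18-month lives are the ones discussed above.

```python
def annual_straight_line_expense(cost: float, useful_life_years: float) -> float:
    """Straight-line depreciation: spread cost evenly over the useful life (zero salvage)."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return cost / useful_life_years


# Hypothetical $1.0B GPU purchase (illustrative assumption only).
cost = 1_000_000_000

book_expense = annual_straight_line_expense(cost, 6.0)      # 6-year accounting life
economic_expense = annual_straight_line_expense(cost, 1.5)  # 18-month economic life

# Annual amount by which reported expense understates economic expense
# while the asset is still in its 18-month economic window.
gap = economic_expense - book_expense

print(f"Book expense per year:     ${book_expense:,.0f}")
print(f"Economic expense per year: ${economic_expense:,.0f}")
print(f"Understated expense:       ${gap:,.0f}")
```

Under these assumptions, every $1 billion of training hardware understates annual expense by roughly $500 million during its economic life. Real schedules involve salvage values, staggered purchases, and secondary uses, so treat this as an order-of-magnitude illustration.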

Critics argue this amounts to systematic earnings manipulation across the sector. However, this critique assumes all AI infrastructure faces identical obsolescence patterns. It doesn’t.

Training GPUs purchased in 2023 for frontier model development may indeed be economically worthless by 2025 as customers shift to newer generations or internalize capacity. (Side note here: While Nvidia’s full memo to sell-side analysts has not been made public, one leaked excerpt includes a statement from the company: “Older GPUs such as A100s (released in 2020) continue to run at high utilization and generate strong contribution margins, retaining meaningful economic value well beyond the 2-3 years claimed by some commentators.”)

But when it comes to compute, it is essential to differentiate between training and inference. (See my Tales of Two Compute: Training Part I & Inference Part II)

Inference accelerators deployed in 2021 for serving existing models can generate profitable returns through 2026 if they maintain cost advantages over alternatives.

The distinction matters enormously for evaluating depreciation assumptions.

Inference infrastructure has different characteristics:

Longer useful lives. Inference GPUs or custom accelerators remain productive for 4-5 years. The compute requirements for serving a model don’t change when newer GPU generations are released. Companies can amortize capex across traditional cloud infrastructure timelines.

Distributed deployment. Inference scales horizontally across heterogeneous infrastructure. You can mix GPU generations, deploy custom accelerators, and optimize for cost per token rather than raw performance. The capital is more flexible.

Latency sensitivity. Inference must respond to individual user requests within strict SLOs.

Customer diversification. Every enterprise application eventually needs inference. The potential customer base is millions of businesses, not 15-20 AI labs. Risk is distributed across industries and use cases.

But inference infrastructure still requires massive capital. The total compute required to serve millions of users querying thousands of specialized models exceeds the compute required to train those models. The capital intensity doesn’t disappear. It shifts from concentrated training clusters to distributed inference fabrics.

Will this AI capex intensity continue? Three scenarios for how this plays out

The scenarios below examine how infrastructure economics evolve based on workload trajectories, using 2027 as a reference point. All three scenarios share the same technical constraint: infrastructure optimized for training performs poorly for inference. They differ in how quickly this matters and who adapts successfully.

Scenario One: Inference Dominance (70% probability)

Training represents less than 20% of total AI compute demand by 2027. Model proliferation favors thousands of specialized models (and many of them open-source) over a handful of closed frontier systems. Enterprise adoption prioritizes inference APIs, not training clusters.

In this timeline - the most likely one - training becomes a boutique activity. Fifteen to twenty elite labs building god-models. Everyone else runs inference.

The shift happens faster than expected. Training efficiency improves, building on work showing that sophisticated reasoning behaviors develop through RL against verifiable answers rather than massive human-annotated datasets. This reduces the compute required for frontier performance. Model proliferation accelerates the transition. Instead of a handful of frontier models requiring massive training clusters, the market fragments into thousands of specialized models fine-tuned for specific domains.

In this scenario, the distinction between training infrastructure and inference infrastructure becomes absolute. Training requires dense, homogeneous clusters of cutting-edge GPUs optimized for batch processing. Inference requires distributed, heterogeneous deployments of cost-optimized accelerators optimized for latency, memory bandwidth, and power efficiency.

The moats that matter in this scenario:

Custom silicon becomes the primary competitive advantage. Custom inference-optimized chips achieve 40-60% better cost per token. Over 4-5 year infrastructure lifecycles, this accumulates into structural advantages. Google’s TPU investments from 2018 onward, AWS’s Inferentia/Trainium development, and Meta’s MTIA deployment all reflect early recognition of this dynamic. By 2027, companies without custom silicon face permanent cost disadvantages.

Architectural lock-in creates switching costs. When models train on specific silicon, they optimize for that silicon’s characteristics. The model architecture co-evolves with the hardware. Inference then runs optimally on that same silicon. This is technical interdependence. Anthropic’s dual relationship with Google (TPU for inference) and AWS (Trainium for training) exemplifies how customers choose providers based on architectural fit, not just price. Switching costs become prohibitive through technical reality, not legal restrictions.

Base utilization determines metabolic efficiency. Companies with internal workloads guarantee baseline utilization regardless of external customer demand. Google’s Search, YouTube, Gmail, and Maps all run on inference. Meta’s recommendations and ads require constant inference. These internal workloads provide 80-90% base utilization, meaning external customer revenue flows directly to profit after covering marginal costs. Companies dependent entirely on external customers (CoreWeave, Oracle Cloud Infrastructure) face utilization risk when market conditions shift.

Depreciation schedules separate winners from losers. Inference infrastructure remains useful for 4-5 years. Training infrastructure obsolescence occurs in 18 months as new GPU generations deploy and training efficiency improves. Companies that invested $100+ billion in H100s for training workloads in 2024 face write-downs by 2026 as customers shift to inference and newer training hardware. Companies that build inference infrastructure see stable utilization across multi-year horizons.

The implications are clear. Companies with custom silicon and base utilization win on unit economics. Companies renting homogeneous GPU clusters face structural cost disadvantages that are hard to overcome.
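The base-utilization point can be sketched with simple arithmetic: the same fixed annual cost per accelerator is spread over fewer billable hours when utilization falls. The 85% figure is the midpoint of the 80-90% range above; the 50% external-only utilization and the $10,000 annual fixed cost are hypothetical assumptions chosen purely for illustration.

```python
HOURS_PER_YEAR = 8760


def cost_per_utilized_hour(annual_fixed_cost: float, utilization: float) -> float:
    """Fixed cost carried by each hour that actually does useful work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return annual_fixed_cost / (HOURS_PER_YEAR * utilization)


annual_fixed_cost = 10_000.0  # hypothetical per-accelerator depreciation + power + space

with_base_load = cost_per_utilized_hour(annual_fixed_cost, 0.85)  # internal workloads fill the fleet
external_only = cost_per_utilized_hour(annual_fixed_cost, 0.50)   # assumed external-only utilization

# How much more each productive hour costs the external-only provider.
premium = external_only / with_base_load - 1

print(f"With base load: ${with_base_load:.2f} per utilized hour")
print(f"External only:  ${external_only:.2f} per utilized hour")
print(f"Cost premium:   {premium:.0%}")
```

Under these assumptions the external-only provider carries about 70% more fixed cost per productive hour, before any custom-silicon advantage is counted.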

Scenario Two: Training Bifurcates (20% probability)

Training splits into two distinct markets by 2027. Inference still grows to 60-65% of compute spending, but training doesn’t disappear - it bifurcates between frontier foundation models and distributed fine-tuning/adaptation.

Frontier models at the top - built by hyperscalers and AI labs with billions to spend. Fine-tuning at the bottom - done by enterprises on managed platforms with compliance and security. The middle goes away.

Anthropic exemplifies the first market. Amodei signaled at DealBook that scaling laws will keep pushing compute needs for elite labs like his, and a rumored 2026 IPO could fund further internalization or dedicated hyperscaler ties (e.g., its AWS Trainium deal).

The two training markets become:

Frontier foundation models: Increasingly internal to hyperscalers and well-funded AI labs. OpenAI committing $500B to Stargate (10 GW capacity), xAI building a 200,000-GPU Colossus cluster in 122 days, Anthropic investing $50B in owned infrastructure - all suggest the largest players are internalizing rather than renting compute.

This directly undermines the neocloud addressable market. If OpenAI, Anthropic, and xAI build owned capacity, who rents massive GPU clusters?

Enterprise fine-tuning: Moves to managed platforms with existing enterprise relationships. Fine-tuning runs on smaller infrastructure, but requires tooling, security, and compliance that raw GPU rental doesn’t provide. Databricks, Snowflake, and cloud provider AI platforms capture this market through integration advantages, not infrastructure scale.

The moats that matter:

For frontier training: Scale and capital efficiency determine winners. The hyperscalers can build at massive scale, amortize costs across internal and external workloads, and price competitively. Companies dependent purely on external training customers (CoreWeave, Oracle Cloud Infrastructure) compete for a tiny slice, perhaps 5-10 organizations globally that need 100,000+ GPU clusters but can’t justify building them. This isn’t a market large enough to support multiple specialized providers.

For enterprise fine-tuning: Platform integration becomes the competitive advantage. This isn’t “raw GPU rental” anymore - it’s “fine-tuning as a service” with comprehensive tooling, security, compliance, and ease of use. Microsoft wins through enterprise distribution (customers already use Azure and Office 365). AWS wins through breadth and ecosystem (widest range of model options, integration with existing AWS services). Google competes on AI/ML tooling sophistication.

Customer stickiness shifts from infrastructure to workflow: In the training-dominated world, customers choose providers based on GPU availability and interconnect speed. In the bifurcated world, frontier customers choose based on total cost of ownership and architectural fit (which favors hyperscalers and custom silicon). Enterprise fine-tuning customers choose based on platform integration and developer experience (which favors companies with existing enterprise relationships).

This bifurcation leaves no room for generalist GPU rental services. Too small for frontier, too inflexible for enterprise.

Scenario Three: Paradigm Shift (10% probability)

Post-transformer architectures fundamentally change compute requirements by 2027.

What if transformers weren’t the final answer? What if an arXiv paper next month shows that neurosymbolic architectures do everything transformers do, but 10x more efficiently, on different hardware?

Neurosymbolic AI, world models, liquid neural networks, or other novel approaches require different hardware profiles than current GPUs optimized for transformer operations.

Let’s take the example of neurosymbolic AI. Current GPUs are optimized for matrix operations in transformers, but neurosymbolic AI might favor hybrid processors that handle both parallel (neural) and sequential (symbolic) computations efficiently. Experts think this could shift demand toward neuromorphic chips (brain-inspired hardware like Intel’s Loihi) or FPGAs for flexible rule-based processing, making dense GPU clusters less relevant.

This low-probability scenario represents the highest uncertainty and the most dramatic competitive inversion. If the key architecture of AI changes and moves beyond transformers to something requiring different compute patterns, then existing infrastructure investments face potential obsolescence.

The moats that invert:

Sunk costs become liabilities, not assets. Companies with enormous investments in transformer-specific custom silicon face the highest switching costs. TPUs optimized for transformer inference, Trainium chips designed for transformer training patterns, MTIA accelerators tuned for transformer-based recommendations - all require fundamental redesign if the paradigm shifts. The advantage of early custom silicon investment becomes the disadvantage of institutional commitment to obsolete architectures.

Architectural lock-in reverses. Companies that created moats through architectural lock-in (Anthropic optimized for Google TPUs, models trained on AWS Trainium) face the highest migration costs. The technical interdependence that prevented switching becomes the impediment to adopting new paradigms. Google’s position with Anthropic shifts from advantage to liability if Anthropic’s models need migration to post-transformer architectures requiring entirely new chips.

Internal workloads become migration challenges. Companies with massive internal deployments (Google’s Search and YouTube on TPUs, Meta’s recommendations on MTIA) face the largest transition burdens. Every internal application optimized for transformer-based models requires reimplementation. The base utilization advantage becomes a base migration cost.

Generic infrastructure gains temporary advantage. Companies with less custom silicon investment and newer infrastructure programs face lower switching costs. Microsoft’s Maia and Cobalt silicon programs, being newer, have less institutional commitment to transformer-specific optimizations. Oracle and neoclouds dependent on NVIDIA face their own challenges (waiting 2-3 years for NVIDIA to develop appropriate accelerators), but at least they don’t have stranded custom silicon investments.

The selection mechanism:

The paradigm shift fundamentally reshuffles who has fitness for the new environment. But with only 10% probability, this scenario serves primarily as a reminder that current competitive advantages aren’t permanent. They’re contingent on the technological paradigm remaining stable.

The historical parallel: Intel’s dominance in x86 CPUs looked permanent until mobile computing shifted the paradigm to ARM architectures. Intel’s enormous investments in x86 optimization became liabilities when the environment changed. The same could happen to AI infrastructure if post-transformer paradigms emerge.

Separating Justified Investment from Stranded Assets: The Disclosure Gap

Wall Street’s skepticism about AI capital intensity stems from opacity. This brings us full circle to the original question: Which specific investments generate sustainable returns?

This is precisely where current disclosures fail. Without transparency into workload mix, customer concentration, custom silicon deployment, and depreciation assumptions, investors cannot determine which companies have favorable economics and which face structural challenges.

The right questions

Rather than asking “how much is being spent,” we should ask:

What workload mix will this infrastructure actually serve over its economic lifetime?

If 75%+ of compute shifts to inference but your infrastructure achieves only 0.4-33% utilization on inference workloads, depreciation schedules become irrelevant - the hardware is economically obsolete.

What switching costs exist to move workloads to better-matched infrastructure?

Models optimized for specific silicon during training can’t easily migrate. Google documents that workloads moving from GPUs to TPUs see 3.8-4x cost improvements - creating lock-in for whoever trains the model.

Who has architectural optionality versus architectural lock-in?

Hyperscalers building custom inference silicon (Google TPUs, AWS Trainium, Meta MTIA) can optimize for actual workload characteristics. Neoclouds renting homogeneous GPU clusters cannot.

What is the refinancing risk if asset values decline faster than expected?

Equity-financed infrastructure (hyperscalers) faces earnings volatility. Debt-financed infrastructure faces potential insolvency when collateral values fall below loan covenants.

This is what I think companies should disclose to respond:

Workload mix: Training vs. inference as % of total compute. Trend over 4-8 quarters. Breakdown by customer type (internal, enterprise, AI labs).

If we are in Scenario One, this single metric would reveal whether a company is building for growth markets (inference) or declining markets (training).

Custom silicon deployment: % of AI workloads running on custom vs. third-party silicon. Cost per compute unit comparison to alternatives. Depreciation schedules for custom silicon versus commodity GPUs.

This would quantify the cost advantages that create sustainable moats.

Customer concentration by workload: Top 10 customers as % of training revenue. Top 10 customers as % of inference revenue. Multi-year contract coverage and renewal rates.

This would distinguish concentrated risk from diversified revenue.

Depreciation assumptions by infrastructure type: Training infrastructure useful life and changes to estimates. Inference infrastructure useful life and rationale.

This would expose companies using optimistic depreciation schedules that obscure economic reality.

Architectural lock-in metrics: % of customer workloads optimized for company-specific infrastructure. Estimated switching costs for customers to migrate to alternative providers.

This would quantify competitive moats from technical interdependence.
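One way to picture the five disclosures above is as a single per-quarter record. The sketch below is purely illustrative: the class name, field names, and validation rules are my own hypothetical choices, not an existing reporting standard, and the example values are made up.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AIInfraDisclosure:
    """Hypothetical quarterly disclosure record; all *_share fields are fractions in [0, 1]."""
    training_compute_share: float          # workload mix
    inference_compute_share: float
    custom_silicon_share: float            # share of AI workloads on custom silicon
    top10_training_revenue_share: float    # customer concentration by workload
    top10_inference_revenue_share: float
    training_useful_life_years: float      # depreciation assumptions
    inference_useful_life_years: float
    lock_in_share: float                   # workloads optimized for company-specific infrastructure

    def problems(self) -> list[str]:
        """Return a list of basic consistency problems (empty if none)."""
        issues = []
        for name, value in vars(self).items():
            if name.endswith("_share") and not 0.0 <= value <= 1.0:
                issues.append(f"{name} must be between 0 and 1")
        if abs(self.training_compute_share + self.inference_compute_share - 1.0) > 1e-9:
            issues.append("training and inference compute shares must sum to 1")
        if min(self.training_useful_life_years, self.inference_useful_life_years) <= 0:
            issues.append("useful lives must be positive")
        return issues


# Made-up example values, for illustration only.
example = AIInfraDisclosure(0.3, 0.7, 0.4, 0.8, 0.2, 1.5, 4.5, 0.5)
inconsistent = replace(example, inference_compute_share=0.9)

print(example.problems())       # no problems expected
print(inconsistent.problems())  # flags the workload mix that does not sum to 1
```

The point of a fixed schema is comparability: the same eight numbers from each company, tracked over 4-8 quarters, would let investors line up workload mix against depreciation assumptions directly.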

You could then evaluate the $1.71 of Capex per dollar of sales with actual understanding. Armed with such a breakdown, investors would be in a far stronger position to move past binary debates over whether or not hyperscalers are engaged in irrational exuberance. Instead, they could begin to identify which ones are developing rational, adaptive responses to a genuinely new environment, one that won’t be static but will continue to evolve rapidly.

Not all capital is equal.

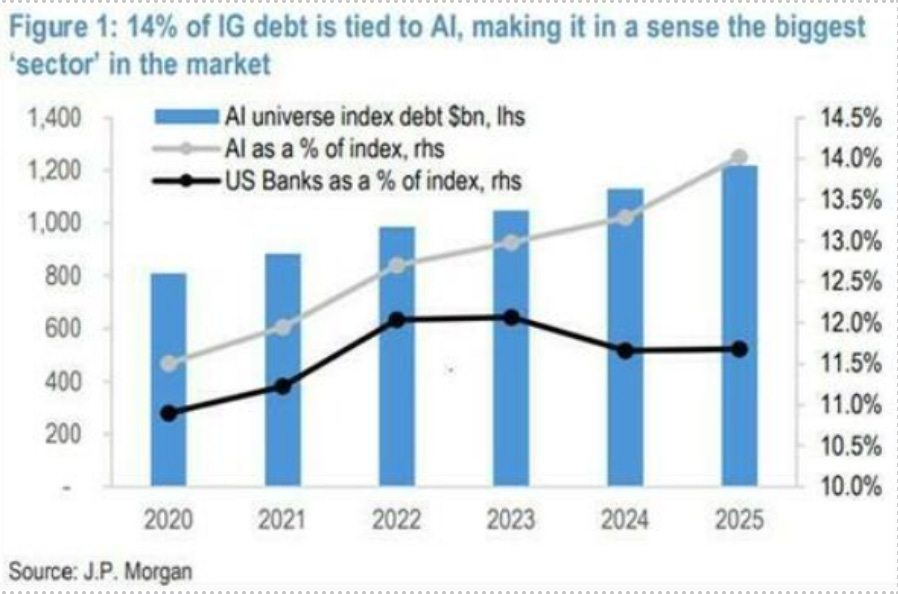

Also, the debt dimension cannot be ignored.

Figure 2 - % of IG debt tied to AI (source: J.P. Morgan)

For equity-financed infrastructure (Google, AWS, Meta, Microsoft), workload mismatch creates accounting corrections and earnings volatility - painful but manageable with multibillion-dollar cash positions.

For debt-financed infrastructure, the same mismatch creates potential insolvency when collateral values decline faster than loan amortization.
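A rough sketch of that mechanism: track collateral value and loan balance month by month and look for the first month the collateral no longer covers the loan. Everything here is a simplifying assumption for illustration: linear decline in collateral value to zero, linear loan amortization, an 80% loan-to-value ratio, and a 60-month term. None of these terms come from any actual credit agreement.

```python
from typing import Optional


def first_shortfall_month(
    asset_cost: float,
    loan_amount: float,
    economic_life_months: int,
    loan_term_months: int,
) -> Optional[int]:
    """Return the first month in which collateral value drops below the loan balance.

    Assumes collateral value declines linearly to zero over its economic life
    and the loan amortizes linearly over its term. Returns None if the loan
    stays covered for the whole term.
    """
    for month in range(1, loan_term_months + 1):
        collateral = max(0.0, asset_cost * (1 - month / economic_life_months))
        balance = loan_amount * (1 - month / loan_term_months)
        if collateral < balance:
            return month
    return None


# Hypothetical loan: $80 borrowed against $100 of GPUs, repaid over 60 months.
book_view = first_shortfall_month(100.0, 80.0, economic_life_months=72, loan_term_months=60)
economic_view = first_shortfall_month(100.0, 80.0, economic_life_months=18, loan_term_months=60)

print(book_view)      # 6-year life: loan stays covered
print(economic_view)  # 18-month life: shortfall appears early
```

Under these assumptions, the loan looks fully covered if the hardware really lasts six years, but slips under water in month 5 if its economic life is 18 months. The gap between those two answers is the refinancing risk.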

Now, even Meta and Google are taking out corporate bonds to pay for their data center expansions. Oracle is entering new territory, too, in its support of OpenAI, where it has $104 billion of debt as of August 2025, with plans to raise an additional $38 billion to fund AI data centers.

These are not typos.

And of course, now there is CoreWeave. The neocloud provider announced this week that it would sell $2 billion in convertible senior notes. The market reacted by driving its stock down almost 5% in intra-day trading.

The company had already been facing increasing pressure from Wall Street, anxious about the $18.8 billion in debt it’s carrying on its balance sheet. Because the new notes are convertible, they carry the risk of diluting the stock. The company also said some of the money will be used for “capped call transactions,” a hedge typically used to limit that dilution.

Debt backed by training infrastructure with 18-month obsolescence cycles. The timing of the economic value is uncertain. Increasingly complex financial instruments are being used to fund this build-out. And a web of tight links binds the financial fate of a handful of AI and hyperscaler companies together.

It all points to a potentially massive systemic risk.

And yet, these are highly complex investments that boil down to fundamental questions: Is a company building the right infrastructure for the right workloads, serving the right customers, and leveraging the right competitive advantages?

Get that right, and $1.71 Capex per dollar of sales is sustainable.

Get it wrong, and even $0.71 leads to insolvency.

In 2027, we’ll know which is which.
